Spherical-graph-based weather prediction

[Article: GraphCast: AI model for faster and more accurate global weather forecasting]

Summary by Adrian Wilkins-Caruana

[The original DeepMind article]

Last week, we discussed two amazing mathematics models from Google DeepMind: AlphaProof and AlphaGeometry 2. (Definitely give that article a read if you haven’t!) Today we’ll take a look at an interesting weather-prediction model called GraphCast, which Google DeepMind released last year. Google’s press release says that GraphCast can predict weather conditions up to 10 days in advance, faster and more accurately than an industry-standard weather simulation system. Let’s explore how systems like these work, and then discuss how and why an AI-based approach might be better.

Meteorologists predict the weather by plugging information about what the weather is now (temperature, humidity, air pressure, etc.) into carefully designed physics equations that calculate what the weather will be in the future. This approach is called numerical weather prediction (NWP), and the gold-standard NWP system is called High Resolution Forecast (HRES). Despite how truly incredible NWP methods are, they’re not perfect. I’m not an NWP expert, but I would guess that tiny errors in weather measurements, or slight differences between the equations and the real world, are among NWP’s limitations.
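Real NWP models are enormous, but a classic toy system shows why tiny measurement errors matter so much. The sketch below swaps in the Lorenz-63 equations (a three-variable model of convection, not anything HRES actually uses) and integrates them forward with a fourth-order Runge-Kutta step, once from a "measured" state and once from the same state nudged by 1e-8. The constants, step size, run length, and perturbation are arbitrary choices for illustration.

```python
import math

# Lorenz-63: a classic three-variable toy model of atmospheric convection.
# It stands in for "physics equations" here; real NWP models are vastly larger.
SIGMA, RHO, BETA = 10.0, 28.0, 8.0 / 3.0


def lorenz(state):
    """Time derivative of the Lorenz-63 state (x, y, z)."""
    x, y, z = state
    return (SIGMA * (y - x), x * (RHO - z) - y, x * y - BETA * z)


def rk4_step(state, dt):
    """Advance the state by one time step with classic 4th-order Runge-Kutta."""
    def add(a, b, scale):
        return tuple(ai + scale * bi for ai, bi in zip(a, b))

    k1 = lorenz(state)
    k2 = lorenz(add(state, k1, dt / 2))
    k3 = lorenz(add(state, k2, dt / 2))
    k4 = lorenz(add(state, k3, dt))
    return tuple(
        s + dt / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def forecast(initial_state, n_steps, dt=0.01):
    """Integrate forward and return the whole trajectory, including the start."""
    trajectory = [initial_state]
    for _ in range(n_steps):
        trajectory.append(rk4_step(trajectory[-1], dt))
    return trajectory


def distance(a, b):
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


measured = (1.0, 1.0, 1.0)
slightly_off = (1.0 + 1e-8, 1.0, 1.0)  # a tiny "measurement error"

run_a = forecast(measured, 5000)
run_b = forecast(slightly_off, 5000)

for step in (0, 1000, 2000, 3000, 4000, 5000):
    sep = distance(run_a[step], run_b[step])
    print(f"t={step * 0.01:5.1f}  separation={sep:.2e}")
```

The two runs start out indistinguishable and eventually disagree completely, even though the equations are identical and perfectly solved to within the integrator's accuracy. That sensitivity is one reason forecasts get harder the further ahead they look.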

The Google DeepMind researchers think that AI-based methods may be suitable here, since they might be able to learn the complicated relationship between past and future weather observations. So the researchers designed GraphCast, a model that uses a graph neural network (GNN) to model weather. GNNs are actually quite similar to the common Transformer neural network architecture. The “graph” part of GNN refers to how information can flow between different parts of the network. In a Transformer, the “graph” encodes the idea that earlier tokens affect later ones, whereas GraphCast’s graph encodes the idea that the weather at a particular location affects the weather at nearby locations. The researchers use an icosahedral mesh (a sphere-like structure composed of triangles) to represent the state of the weather around the globe. They actually use several mesh levels, as shown in the figure. (I’ll explain how these levels are used shortly.)
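To make the Transformer comparison concrete, here is a minimal sketch of one round of message passing in which the only thing that changes is the graph. The update rule (average the incoming values, then blend with the node’s own value using the made-up weights `w_self` and `w_msg`) is a hypothetical stand-in for the learned networks in a real GNN, and a Transformer would use learned attention weights instead of a plain average. The five-node graphs are illustrative only.

```python
def causal_edges(n_tokens):
    """Transformer-style (decoder) graph: token j sends to token i when j <= i."""
    return [(j, i) for i in range(n_tokens) for j in range(i + 1)]


def ring_edges(n_locations):
    """Toy "nearby locations" graph: each location hears from its two ring neighbours."""
    edges = []
    for i in range(n_locations):
        edges.append(((i - 1) % n_locations, i))
        edges.append(((i + 1) % n_locations, i))
    return edges


def message_passing_step(values, edges, w_self=0.5, w_msg=0.5):
    """One round: every node averages what arrives on its incoming edges and blends
    it with its own value. All nodes read the old values, so updates are simultaneous."""
    incoming = {i: [] for i in range(len(values))}
    for sender, receiver in edges:
        incoming[receiver].append(values[sender])
    new_values = []
    for i, v in enumerate(values):
        msgs = incoming[i]
        mean_msg = sum(msgs) / len(msgs) if msgs else 0.0
        new_values.append(w_self * v + w_msg * mean_msg)
    return new_values


values = [0.0, 0.0, 10.0, 0.0, 0.0]
print(message_passing_step(values, causal_edges(5)))
print(message_passing_step(values, ring_edges(5)))
```

With the causal graph, the spike at position 2 only leaks forward to positions 3 and 4; with the ring graph, it spreads to both neighbours, which is the kind of spatial influence GraphCast’s mesh is built to express.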

Each node (vertex) and each edge in this mesh holds a vector of learned features, an encoded summary of the weather there, and neural-network weights that are shared across the whole mesh update those vectors, much as the same weights are reused across positions in a regular neural network. As you can see in the figure, the information at each node can be “passed” to neighboring nodes along the edges. This is where the different levels come in: they help pass information over different distances, with coarse levels moving it a long way in a single hop and fine levels handling local detail. GraphCast uses seven mesh levels, with the coarsest (M0) containing 12 vertices and the finest (M6) containing 40,962. The learned message passing over the different levels’ edges happens simultaneously, so each node is updated using all of its incoming edges, as depicted by the blue arrows in the figure.
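The vertex counts above follow from how an icosahedral mesh is usually refined: every triangle is split into four by adding a vertex at the midpoint of each edge and pushing it out onto the sphere, so level r has 10·4^r + 2 vertices. The sketch below is a plain-Python reconstruction under that assumption, not GraphCast’s own mesh code (its vertex ordering and data layout may differ). It builds M0 through M6, counts vertices and edges, combines every level’s edges on the finest level’s vertices into one "multi-mesh", and tallies how many neighbours each M6 node has.

```python
import math
from collections import Counter


def normalize(v):
    """Project a 3-D point onto the unit sphere."""
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)


def icosahedron():
    """The 12 vertices and 20 triangular faces of a regular icosahedron (M0)."""
    p = (1 + math.sqrt(5)) / 2  # golden ratio
    verts = [(-1, p, 0), (1, p, 0), (-1, -p, 0), (1, -p, 0),
             (0, -1, p), (0, 1, p), (0, -1, -p), (0, 1, -p),
             (p, 0, -1), (p, 0, 1), (-p, 0, -1), (-p, 0, 1)]
    faces = [(0, 11, 5), (0, 5, 1), (0, 1, 7), (0, 7, 10), (0, 10, 11),
             (1, 5, 9), (5, 11, 4), (11, 10, 2), (10, 7, 6), (7, 1, 8),
             (3, 9, 4), (3, 4, 2), (3, 2, 6), (3, 6, 8), (3, 8, 9),
             (4, 9, 5), (2, 4, 11), (6, 2, 10), (8, 6, 7), (9, 8, 1)]
    return [normalize(v) for v in verts], faces


def refine(verts, faces):
    """Split every triangle into four, adding one new vertex per edge midpoint.
    Existing vertices keep their indices, so coarse vertices survive in finer meshes."""
    verts = list(verts)
    midpoint_index = {}

    def midpoint(a, b):
        key = (min(a, b), max(a, b))
        if key not in midpoint_index:
            mid = tuple((x + y) / 2 for x, y in zip(verts[a], verts[b]))
            verts.append(normalize(mid))
            midpoint_index[key] = len(verts) - 1
        return midpoint_index[key]

    new_faces = []
    for a, b, c in faces:
        ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
        new_faces += [(a, ab, ca), (b, bc, ab), (c, ca, bc), (ab, bc, ca)]
    return verts, new_faces


def edges_of(faces):
    """Undirected edges of a triangle mesh, each stored once as (low, high)."""
    edges = set()
    for a, b, c in faces:
        for u, v in ((a, b), (b, c), (c, a)):
            edges.add((min(u, v), max(u, v)))
    return edges


verts, faces = icosahedron()
levels = []  # (number of vertices, edge set) for M0..M6
for r in range(7):
    if r > 0:
        verts, faces = refine(verts, faces)
    levels.append((len(verts), edges_of(faces)))

for r, (n_verts, edges) in enumerate(levels):
    print(f"M{r}: {n_verts:6d} vertices, {len(edges):7d} edges")

# Multi-mesh: the vertices of M6 plus the edges of every level M0..M6.
multimesh_edges = set().union(*(edges for _, edges in levels))
print("multi-mesh undirected edges:", len(multimesh_edges))

# Neighbour counts on M6.
degree = Counter()
for u, v in levels[-1][1]:
    degree[u] += 1
    degree[v] += 1
print("M6 neighbour-count histogram:", dict(Counter(degree.values())))
```

Because each refinement keeps the old vertices and only adds new ones, every coarse edge can sit directly on the finest mesh’s nodes, which is what lets all the levels pass messages at the same time. The histogram also shows that only the 12 original icosahedron corners have five neighbours; every other node has six.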

You might be wondering, “Why don’t they just use a square grid, like latitude and longitude, and pass messages along this grid?” I can think of two key advantages of the mesh approach. First, most nodes on an icosahedral mesh have six neighbors (only the 12 corners of the original icosahedron have five), whereas nodes in a square grid have only four, so more information gets shared. But, more importantly, the nodes on the icosahedral mesh are spaced almost evenly around the globe. On a latitude/longitude grid, nodes get closer together as you move away from the equator, because the lines of longitude converge at the poles, so the grid cells become smaller and more distorted.
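A few lines of arithmetic show how uneven a latitude/longitude grid is. Assuming a perfectly spherical Earth with a radius of 6,371 km (an approximation), the east-west distance between neighbouring points on a 0.25° grid shrinks with the cosine of latitude, while the north-south spacing stays fixed. The latitudes sampled here are arbitrary.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; treating Earth as a sphere is an approximation
GRID_STEP_DEG = 0.25      # spacing of the latitude/longitude grid


def east_west_spacing_km(lat_deg, step_deg=GRID_STEP_DEG):
    """Distance between two grid points one longitude step apart at this latitude."""
    return EARTH_RADIUS_KM * math.cos(math.radians(lat_deg)) * math.radians(step_deg)


def north_south_spacing_km(step_deg=GRID_STEP_DEG):
    """Distance between two grid points one latitude step apart (same everywhere)."""
    return EARTH_RADIUS_KM * math.radians(step_deg)


for lat in (0.0, 45.0, 60.0, 80.0, 89.75):
    print(f"lat {lat:5.2f}°: east-west {east_west_spacing_km(lat):6.2f} km, "
          f"north-south {north_south_spacing_km():5.2f} km")
```

At the equator, neighbouring points are about 27.8 km apart in both directions, but one step from the pole the east-west gap is only about 0.12 km, so a grid-based GNN would spend many nodes and hops on tiny polar cells.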

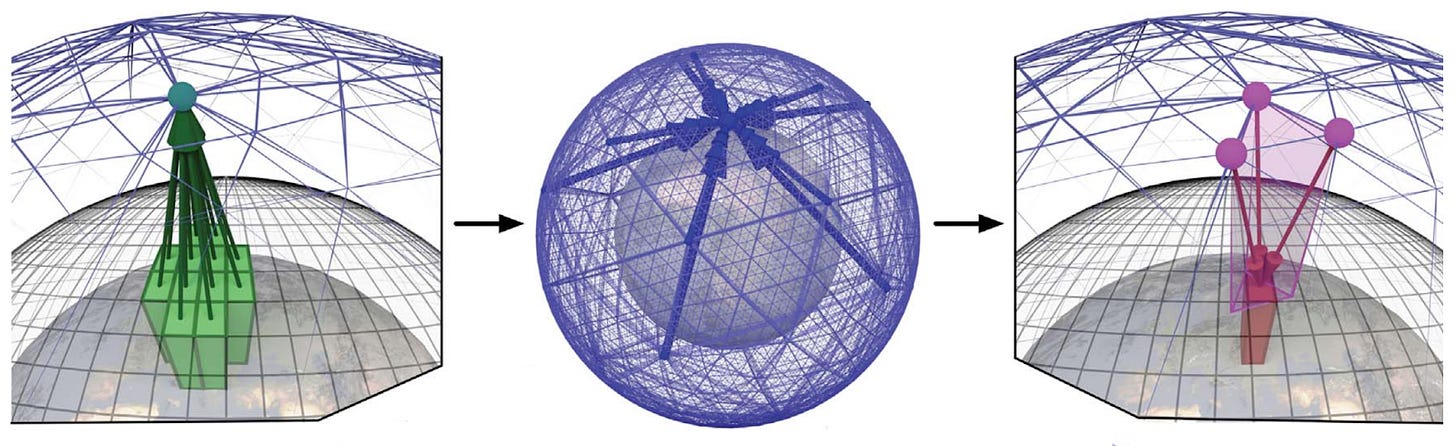

This fancy neural network with its graph and icosahedrons is really cool, but it isn’t all for show. GraphCast works in three steps. First, an encoder maps information about the current state of the weather on a regular latitude/longitude grid onto nodes in the mesh. This information consists of five Earth-surface variables (including temperature, wind speed and direction, and mean sea-level pressure) and six atmospheric variables (including humidity, wind speed and direction, and temperature) at each of 37 pressure levels, which correspond roughly to altitudes. Next, a processor updates the information on the mesh using the message-passing technique we just discussed. Finally, a decoder maps the mesh information back onto the grid to predict what the surface and atmospheric variables will be 6 hours later. Here’s what this process looks like:
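The three steps can also be sketched in code. The bookkeeping at the top uses the variable counts from the paragraph above plus the 721 × 1440 points of a 0.25° global grid; the real model also takes the previous time step and some extra inputs that this count leaves out. The pipeline below that is a deliberately tiny toy on a 1-D ring: the nearest-node assignment, the fixed averaging weights, and the functions `encode`, `process`, `decode`, and `predict_next` are hypothetical stand-ins for GraphCast’s learned encoder, processor, and decoder networks.

```python
# Bookkeeping: how many numbers describe one global weather state?
SURFACE_VARS = 5
ATMOS_VARS = 6
PRESSURE_LEVELS = 37
N_LAT, N_LON = 721, 1440  # a 0.25-degree grid, both poles included

vars_per_point = SURFACE_VARS + ATMOS_VARS * PRESSURE_LEVELS
values_per_state = vars_per_point * N_LAT * N_LON
print(vars_per_point, "variables per grid point,", f"{values_per_state:,}", "values per state")

# Toy encode-process-decode on a 1-D ring (hypothetical stand-in for the real model).
N_GRID, N_MESH = 12, 4  # many grid points, fewer mesh nodes


def nearest_mesh_node(grid_index):
    """Assign each grid point to the mesh node covering its slice of the ring."""
    return grid_index * N_MESH // N_GRID


def encode(grid_values):
    """Grid -> mesh: each mesh node averages the grid points assigned to it."""
    buckets = [[] for _ in range(N_MESH)]
    for i, v in enumerate(grid_values):
        buckets[nearest_mesh_node(i)].append(v)
    return [sum(b) / len(b) for b in buckets]


def process(mesh_values, n_rounds=2):
    """Mesh -> mesh: rounds of simultaneous message passing between ring neighbours."""
    for _ in range(n_rounds):
        mesh_values = [
            0.5 * v + 0.25 * (mesh_values[i - 1] + mesh_values[(i + 1) % N_MESH])
            for i, v in enumerate(mesh_values)
        ]
    return mesh_values


def decode(mesh_values):
    """Mesh -> grid: each grid point reads back its mesh node's updated value."""
    return [mesh_values[nearest_mesh_node(i)] for i in range(N_GRID)]


def predict_next(grid_values):
    return decode(process(encode(grid_values)))


current = [0.0] * N_GRID
current[0:3] = [9.0, 9.0, 9.0]  # a warm patch covered by mesh node 0
print(predict_next(current))
```

The encoder and decoder let the processor work on a smaller, evenly spaced graph instead of the full grid (roughly 41,000 mesh nodes versus about a million grid points in GraphCast’s case); in the real model, all three stages are learned rather than fixed averages.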

To train GraphCast, the researchers used a dataset called the ERA5 reanalysis archive. It contains decades of historical global weather maps, which the European Centre for Medium-Range Weather Forecasts (ECMWF) derived by combining vast amounts of historical weather observations using traditional NWP. Given the weather at one moment in history and the weather 6 hours before that, GraphCast was trained to predict the weather 6 hours later, minimizing the mean squared error between its prediction and what ERA5 records. To predict further ahead, the model’s output can be fed back into itself autoregressively, one 6-hour step at a time (a 10-day forecast takes 40 steps), but the results become less reliable with each step as small errors accumulate.
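Both the training objective and the autoregressive rollout can be illustrated with a toy "weather" that simply rotates a 2-D state by a fixed angle every 6-hour step. The sketch assumes a hypothetical learned model whose angle is off by 0.01 radians (the constants `TRUE_ANGLE` and `MODEL_ANGLE` are made up). It scores predictions with a plain, unweighted mean squared error and feeds the model’s output back into itself for 40 steps, the length of a 10-day forecast.

```python
import math

TRUE_ANGLE = 0.30   # hypothetical "true" change over one 6-hour step
MODEL_ANGLE = 0.31  # hypothetical learned model: close, but slightly wrong


def rotate(state, angle):
    x, y = state
    c, s = math.cos(angle), math.sin(angle)
    return (c * x - s * y, s * x + c * y)


def true_step(state):
    return rotate(state, TRUE_ANGLE)


def model_step(state):
    return rotate(state, MODEL_ANGLE)


def mse(prediction, target):
    """Mean squared error: the kind of quantity minimised during training."""
    return sum((p - t) ** 2 for p, t in zip(prediction, target)) / len(target)


def rollout(step_fn, state, n_steps):
    """Autoregressive forecast: each output becomes the next input."""
    states = []
    for _ in range(n_steps):
        state = step_fn(state)
        states.append(state)
    return states


start = (1.0, 0.0)
truth = rollout(true_step, start, 40)      # 40 steps of 6 hours = 10 days
forecast = rollout(model_step, start, 40)
errors = [mse(f, t) for f, t in zip(forecast, truth)]

for step in (1, 4, 12, 20, 40):
    print(f"{step * 6:4d} h ahead: MSE = {errors[step - 1]:.2e}")
```

A one-step MSE this small would look excellent during training, yet because every step starts from the model’s own slightly wrong output, the error grows steadily over the rollout. Real forecast errors grow for messier reasons, but the compounding works the same way.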

The Google researchers report that GraphCast is more accurate than HRES, the gold-standard NWP system used today, on 90% of the 1,380 verification targets they tested, and in their press release they shared a great example of how a difference in forecast accuracy can have a real-world impact. In September of last year, Hurricane Lee made landfall in Nova Scotia. GraphCast predicted that this would happen 9 days before it did, while the best NWP systems couldn’t confidently say where Lee would make landfall until 6 days prior. I think that’s both an impressive feat and a meaningful difference!

The researchers have open-sourced GraphCast’s code, which you can read for yourself if you’re so inclined. Also, the ECMWF are running and publishing weather predictions from GraphCast in an experimental section of their website. On that web page, it says that GraphCast’s resolution (0.25°) isn’t fine enough to pick up on some details, and that the model doesn’t predict all the weather variables that might be of interest. Despite this, it’s great to see official groups like the ECMWF take research like this seriously. It’s amazing to think that such research can have a meaningful impact on our everyday lives.