Modified Gaussian splats for realistic face rendering

Paper: Relightable Gaussian Codec Avatars

Summary by Adrian Wilkins-Caruana

The recently invented 3d Gaussian splatting technique has disrupted the computer vision world with new ways to efficiently represent and render 3d scenes. The original method supports static (non-moving) scenes, but researchers have already extended it to handle dynamic scenes, such as moving people and avatars. So far, though, it hasn’t been possible to relight these scenes with artificial lights. The original splatting method also struggles to model fine details such as hair or eye movements, which are important for making models look realistic. Today’s paper focuses on addressing these issues so that we can animate and render realistic models of human heads like the ones below.

To understand the contributions of this paper, it’s useful to think about what kind of additional information is needed to support dynamic lighting and finer details beyond what the vanilla 3d Gaussian method can already provide. The first piece is geometric movement info for the 3d Gaussians: specifically, information about how the shape and position of the Gaussians will change based on the subject’s facial expression. The second piece is info about what the Gaussians should look like when lit from arbitrary directions.

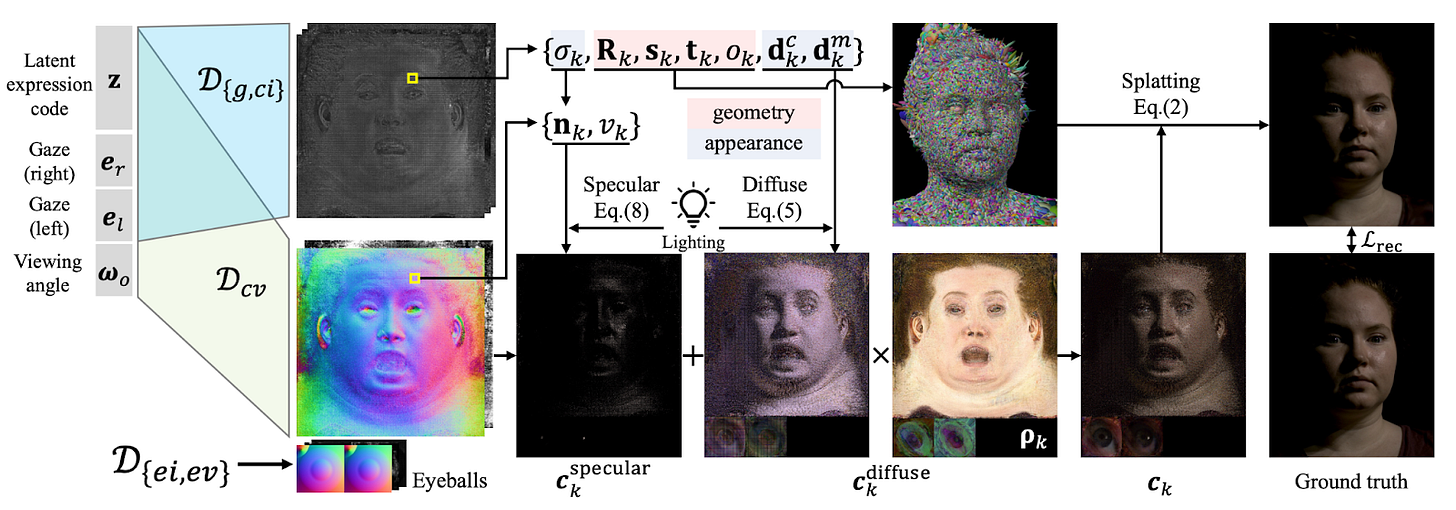

For the geometric information, a variational autoencoder determines where in 3d space each part of the face should be rendered based on the current facial expression. The model in this paper encodes coarsely tracked points on the subject’s face to generate a latent expression code, which is a dense set of numbers that represent the expression. The model includes a decoder that uses this code to determine the updated geometric Gaussian info, like position, scale, and opacity of the 3d Gaussians.
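To make the encode/decode flow concrete, here is a minimal NumPy sketch of a VAE-style geometry model. It is not the paper’s architecture: random linear layers stand in for trained networks, and the sizes (`NUM_TRACKED`, `LATENT_DIM`, `NUM_GAUSSIANS`) and function names are hypothetical. The decoder outputs a per-Gaussian position offset, scale, and opacity; rotation is left out to keep it short.

```python
import numpy as np

rng = np.random.default_rng(0)

NUM_TRACKED = 50      # hypothetical number of coarsely tracked face points
LATENT_DIM = 16       # hypothetical size of the latent expression code
NUM_GAUSSIANS = 1000  # hypothetical number of 3d Gaussians

# Randomly initialized linear layers stand in for the trained encoder/decoder.
W_enc = rng.normal(scale=0.01, size=(NUM_TRACKED * 3, 2 * LATENT_DIM))
W_dec = rng.normal(scale=0.01, size=(LATENT_DIM, NUM_GAUSSIANS * 7))


def encode(tracked_points):
    """Map (NUM_TRACKED, 3) tracked points to the mean and log-variance of the code."""
    h = tracked_points.reshape(-1) @ W_enc
    return h[:LATENT_DIM], h[LATENT_DIM:]


def sample_latent(mean, log_var):
    """Reparameterization trick: z = mean + sigma * eps, with eps ~ N(0, I)."""
    eps = rng.standard_normal(mean.shape)
    return mean + np.exp(0.5 * log_var) * eps


def decode_geometry(z, base_positions):
    """Decode the expression code into per-Gaussian position, scale, and opacity."""
    out = (z @ W_dec).reshape(NUM_GAUSSIANS, 7)
    positions = base_positions + out[:, 0:3]    # offsets from a neutral layout
    scales = np.exp(out[:, 3:6])                # exp keeps scales positive
    opacity = 1.0 / (1.0 + np.exp(-out[:, 6]))  # sigmoid keeps opacity in (0, 1)
    return positions, scales, opacity


# Usage: one expression in, one set of Gaussian geometry out.
tracked = rng.normal(size=(NUM_TRACKED, 3))
base = rng.normal(size=(NUM_GAUSSIANS, 3))
mean, log_var = encode(tracked)
z = sample_latent(mean, log_var)
positions, scales, opacity = decode_geometry(z, base)
```

The exp and sigmoid activations are one common way to keep scales positive and opacities between 0 and 1; the paper’s decoder may parameterize these differently.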

The original Gaussian splatting method models view-dependent color using spherical harmonics, a set of basis functions defined on a sphere that let a Gaussian’s color vary with the direction from which it is viewed. This paper takes Gaussian coloring to the next level by modeling it as a combination of both diffuse (non-shiny) and specular (shiny) components. The diffuse component has two subcomponents, one for color info and another for monochrome info, both computed independently of the camera position. These subcomponents determine how the Gaussians should be colored when lit from different directions or with different facial expressions. For example, ears tend to cast shadows, and that shadow information can be captured by this diffuse color data. The model in this paper captures more monochrome data than color data, since monochrome shading is what supports more realistic renderings of subtle shadows that move as the head position changes. (For those familiar with signal processing, by “more monochrome data,” I’m referring to more high-frequency detail.)
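The sketch below illustrates diffuse shading with spherical harmonics under stated assumptions: a single distant light projected into degree-2 real spherical harmonics, a low-order (degree-1) transfer per color channel, and a higher-order (degree-2) monochrome transfer shared by all channels. Summing the two terms and multiplying by an albedo is an illustrative assumption rather than the paper’s exact formula, and the helper names are hypothetical. No camera direction appears anywhere, which is what makes this term view-independent.

```python
import numpy as np


def sh_basis_deg2(d):
    """Real spherical harmonics up to degree 2 (9 values) for a unit direction d."""
    x, y, z = d
    return np.array([
        0.282095,                      # degree 0
        0.488603 * y,                  # degree 1
        0.488603 * z,
        0.488603 * x,
        1.092548 * x * y,              # degree 2
        1.092548 * y * z,
        0.315392 * (3 * z * z - 1),
        1.092548 * x * z,
        0.546274 * (x * x - y * y),
    ])


def light_to_sh(light_dir, intensity):
    """Project a single distant (delta) light into SH lighting coefficients."""
    d = np.asarray(light_dir, dtype=float)
    d = d / np.linalg.norm(d)
    return intensity * sh_basis_deg2(d)


def diffuse_color(albedo, rgb_transfer, mono_transfer, light_sh):
    """View-independent diffuse color of one Gaussian (hypothetical combination).

    rgb_transfer:  (3, 4) low-order transfer coefficients, one row per channel
    mono_transfer: (9,)   higher-order transfer shared by all channels
    """
    color_term = rgb_transfer @ light_sh[:4]  # (3,): low frequency, tinted
    mono_term = mono_transfer @ light_sh      # scalar: higher frequency, gray
    return albedo * (color_term + mono_term)  # assumption: terms are summed


# Usage: a light straight overhead (+z) shining on one Gaussian.
light = light_to_sh([0.0, 0.0, 1.0], intensity=2.0)
albedo = np.array([0.8, 0.6, 0.5])
rgb_t = np.full((3, 4), 0.1)
mono_t = np.full(9, 0.2)
c = diffuse_color(albedo, rgb_t, mono_t, light)
```

Because the monochrome transfer uses more SH bands than the color transfer, it can represent sharper changes in shading across light directions, which is the kind of high-frequency shadow detail described above.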

Unlike the diffuse lighting information, the specular info is computed based on camera position and is modeled using spherical Gaussians, which are essentially Gaussian distributions on the surface of a sphere. This specular information captures how light reflects off the avatar: It accounts for things like reflections, as well as more subtle, view-dependent phenomena that influence the realistic recreation of lighting effects (for those of you familiar with 3d graphics, these are occlusion, Fresnel effects, and geometric attenuation). A final decoder (again decoding the latent expression code) predicts surface roughness and view-dependent surface normals, which are needed to compute the spherical Gaussians and use them to render view-dependent lighting effects.
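A spherical Gaussian can be written as G(v) = a · exp(λ(μ · v − 1)), where μ is the lobe’s axis, λ its sharpness, and a its amplitude. The minimal sketch below places one lobe on the mirror reflection of the view direction to produce a highlight from a single light. The roughness-to-sharpness mapping (λ = 2 / roughness²) is a hypothetical choice borrowed from common spherical Gaussian approximations, the normal is simply given rather than predicted by a decoder, and the occlusion, Fresnel, and attenuation terms mentioned above are omitted.

```python
import numpy as np


def normalize(v):
    v = np.asarray(v, dtype=float)
    return v / np.linalg.norm(v)


def spherical_gaussian(v, axis, sharpness, amplitude):
    """G(v) = amplitude * exp(sharpness * (dot(axis, v) - 1)) for unit vectors."""
    return amplitude * np.exp(sharpness * (np.dot(axis, v) - 1.0))


def specular(normal, view_dir, light_dir, roughness, amplitude=1.0):
    """Highlight from one distant light using a single spherical Gaussian lobe.

    Hypothetical sketch: the lobe is centered on the mirror reflection of the
    view direction about the normal, and sharpness = 2 / roughness**2, so
    smoother surfaces get tighter highlights. Fresnel, occlusion, and
    geometric attenuation are not modeled.
    """
    n = normalize(normal)
    v = normalize(view_dir)            # surface -> camera
    l = normalize(light_dir)           # surface -> light
    r = 2.0 * np.dot(n, v) * n - v     # mirror reflection of v about n (unit length)
    sharpness = 2.0 / roughness**2
    return spherical_gaussian(l, r, sharpness, amplitude)


# Usage: camera at 45 degrees on one side of the normal.
n = [0.0, 0.0, 1.0]
view = [1.0, 0.0, 1.0]
peak = specular(n, view, [-1.0, 0.0, 1.0], roughness=0.3)      # light at mirror direction
off = specular(n, view, [0.0, 0.0, 1.0], roughness=0.3)        # light overhead, smooth
off_rough = specular(n, view, [0.0, 0.0, 1.0], roughness=0.8)  # same light, rough
```

Moving the light away from the mirror direction makes the highlight fall off quickly on a smooth surface and more gradually on a rough one, which is the behavior the predicted roughness is meant to control.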

In addition to all of the above, the method also separately models each eye, accounting for its orientation and lighting effects such as reflections on the cornea. The whole method is summarized in the figure below. The expression information is decoded into expression-dependent splat geometry as well as UV maps, which are 2d encodings of the surface roughness, normal, specular, and diffuse information. The UV maps are combined to determine the final color of each Gaussian, which is then rendered. The parameters of the models are optimized by comparing the rendered images with ground-truth images of the subject.
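The last two steps, combining per-Gaussian color and comparing the render against ground truth, can be sketched for a single pixel. This assumes the final color is simply diffuse plus specular and that the per-Gaussian values have already been read out of the UV maps. Compositing follows the front-to-back alpha blending rule from the original 3d Gaussian splatting, and the plain L1 photometric loss is an illustrative stand-in for whatever objective the paper actually uses; the function names are hypothetical.

```python
import numpy as np


def composite(colors, alphas, depths):
    """Front-to-back alpha compositing of the Gaussians covering one pixel.

    C = sum_i c_i * a_i * prod_{j<i} (1 - a_j), with Gaussians sorted near to far.
    """
    order = np.argsort(depths)
    pixel = np.zeros(3)
    transmittance = 1.0
    for i in order:
        pixel += transmittance * alphas[i] * colors[i]
        transmittance *= 1.0 - alphas[i]
    return pixel


def l1_loss(rendered, target):
    """Mean absolute error between rendered and ground-truth pixel values."""
    return np.mean(np.abs(rendered - target))


# Usage: two Gaussians overlap the pixel; the blue one (index 1) is closer.
diffuse = np.array([[0.6, 0.0, 0.0], [0.0, 0.0, 0.8]])
specular = np.array([[0.4, 0.0, 0.0], [0.0, 0.0, 0.2]])
colors = diffuse + specular           # assumption: final color = diffuse + specular
alphas = np.array([0.5, 0.5])
depths = np.array([2.0, 1.0])

pixel = composite(colors, alphas, depths)   # 0.5 blue in front, then 0.25 red
target = np.array([0.3, 0.0, 0.5])          # hypothetical ground-truth pixel
loss = l1_loss(pixel, target)
```

In a real training loop, this loss would be computed over many pixels and camera views and differentiated with respect to the decoder parameters; the sketch only shows the forward computation.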

In quantitative tests, this method typically outperforms a pre-existing method for relightable avatars called Mixture of Volumetric Primitives (MVP). The picture below shows the results with the ground truth (far left) and MVP (far right). The two middle columns show the results with (middle left) and without (middle right) the part of the model that includes special components to individually model each eye. From afar, the methods are nearly indistinguishable. However, looking more closely, the proposed method with the explicit eye model (EEM) is substantially better at capturing the eyebrows and the reflections on the eyes.

As the authors suggest, one application of this technique is to reanimate avatars, and they’ve provided some video-driven animation results, where the avatar’s facial expression mimics the expression taken from a video of someone speaking. To me, these animations seem a bit uncanny; I think it’s because the coarse geometry used to animate the avatar isn’t sufficient to convince my brain that the video I’m watching is of a real person. But perhaps, if this were improved, the method could be used to animate avatars for real-time 3d video calls. I’ve been skeptical of such applications for some time, but the results of recent research, such as this method and another we discussed a few weeks ago, are quickly changing my mind.