Do AI models exhibit parallel evolution?

[Paper: The Platonic Representation Hypothesis]

Summary by Adrian Wilkins-Caruana

In philosophy of mind, qualia are instances of subjective, conscious experience. Some examples are our perception of the pain of a headache, the taste of wine, and the redness of a sunset. Due to the subjective nature of these experiences, we actually don’t know whether two people experience these qualia the same way; you may experience the redness of a sunset in a different way than I do, for example. Do neural networks have an analogue of this? In other words, would two neural networks learn to represent the redness of an evening sky in similar ways? A group of researchers have been wondering this, too, and they have a new hypothesis: the Platonic Representation Hypothesis (PRH).

Minyoung Huh and coauthors think that neural networks do in fact experience the world similarly. The figure below states the hypothesis, and includes a diagram that conveys the idea behind it. In the figure, images (X) and text (Y) are projections of a common underlying reality (Z). The PRH conjectures that representation learning algorithms will converge on a shared representation of Z. Making models larger, and making data and tasks more diverse, both seem to drive this convergence.

On the face of it, the PRH seems like a plausible claim, but what evidence do the researchers have for this hypothetical behavior? Here are some facts that the researchers cite:

Different models, with different architectures and objectives, can have aligned representations. For example, one study found that the layers from a model trained on the ImageNet dataset could be “stitched” together with the layers from a separate model trained on the Places-365 dataset (recognizing types of places from images), and the resulting stitched model still had good performance. This suggests that layers learned on different datasets are compatible with each other. Other studies show that this kind of compatibility also applies to other neural network components, like individual neurons; that is, in each model you can find a neuron that activates on the same feature in the input image, no matter which model you use.

Alignment increases with scale and performance. The researchers cited a few papers that add weight to this claim, but they also conducted an experiment of their own: They evaluated how well 78 different vision models — trained with varying architectures, training objectives, and datasets — transfer to the Visual Task Adaptation Benchmark (VTAB, which is designed to test if a visual model can perform well on tasks it wasn’t specifically trained for). They found that models that transfer well to VTAB had very similar representations, while no such similarity was found among the models that couldn’t adapt to VTAB.

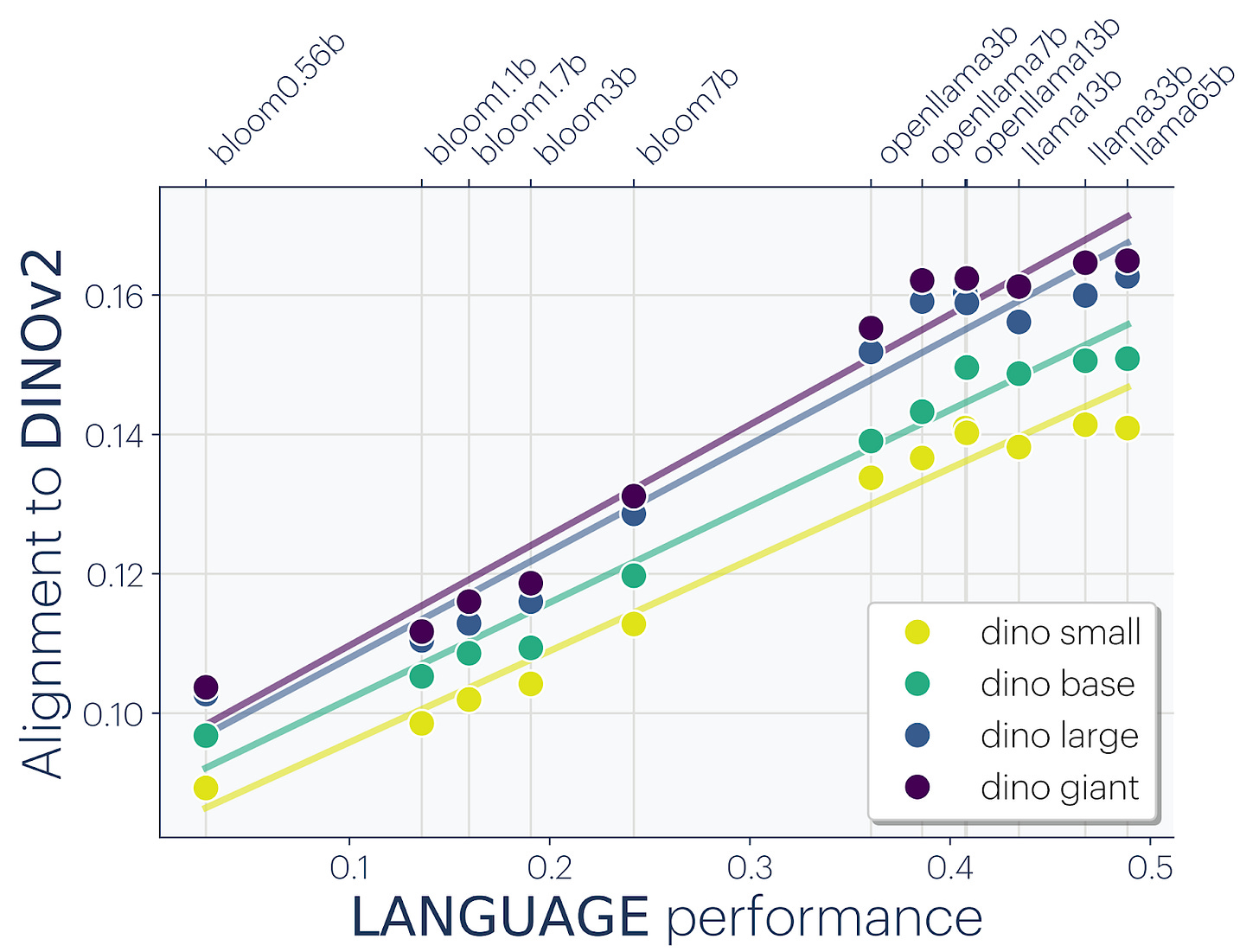

Representations are converging across modalities. This claim is also supported by several studies, but the researchers conducted their own experiments to determine whether models are indeed learning an increasingly modality-agnostic representation of the world. Using a dataset of paired images and captions, they found that the better a language model is at language modeling, the more its representations align with those of DINOv2, a vision model. These results are shown in the figure below.

Models are increasingly aligning to brains. They cited some studies to support this claim, though it’s by far the weakest of their five claims.

Alignment predicts performance on downstream tasks. The researchers found that there’s a correlation between how well language models align to DINOv2 and how well they perform on downstream tasks, like commonsense reasoning and mathematical problem solving.
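
To make "alignment" a bit more concrete, here is a minimal sketch of one way two models' representations could be compared: for each input, check how much its nearest neighbors under one model overlap with its nearest neighbors under the other. This is an assumption for illustration, not the authors' exact procedure. The function names, the use of cosine similarity, the choice of k, and the random toy features are all hypothetical.

```python
import numpy as np

def knn_indices(feats: np.ndarray, k: int) -> np.ndarray:
    """Indices of each row's k nearest neighbors under cosine similarity."""
    normed = feats / np.linalg.norm(feats, axis=1, keepdims=True)
    sim = normed @ normed.T
    np.fill_diagonal(sim, -np.inf)  # a sample is not its own neighbor
    return np.argsort(-sim, axis=1)[:, :k]

def mutual_knn_alignment(feats_a: np.ndarray, feats_b: np.ndarray, k: int = 10) -> float:
    """Mean fraction of shared k-nearest neighbors across two feature spaces.

    Row i of feats_a and row i of feats_b must describe the same input
    (for example, an image and its caption). The score lies in [0, 1].
    """
    nn_a = knn_indices(feats_a, k)
    nn_b = knn_indices(feats_b, k)
    overlap = [len(set(a) & set(b)) / k for a, b in zip(nn_a, nn_b)]
    return float(np.mean(overlap))

# Toy data (hypothetical): 200 inputs seen by three made-up "models".
rng = np.random.default_rng(0)
feats_a = rng.normal(size=(200, 16))
rotation, _ = np.linalg.qr(rng.normal(size=(16, 16)))  # random orthogonal matrix
feats_rotated = feats_a @ rotation           # same geometry, different basis
feats_unrelated = rng.normal(size=(200, 16))  # no shared structure

# A rotated copy keeps the same cosine neighbors, so it should score at or near 1.
print("rotated copy:", mutual_knn_alignment(feats_a, feats_rotated))
# Unrelated features should score near chance, roughly k / (n - 1).
print("unrelated:   ", mutual_knn_alignment(feats_a, feats_unrelated))
```

A neighbor-based score like this only compares which inputs each model treats as similar, so the two feature spaces don't need to have the same dimensionality or share a coordinate system.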

Next, the researchers investigated why such representational alignment might be occurring. They present three further ideas:

The Multitask Scaling Hypothesis. Each training datapoint and objective (task) places an additional constraint on the model. As data and tasks scale, the set of representations that satisfy all of these constraints should shrink.

The Capacity Hypothesis. Larger models and better learning objectives should make it more likely that a model arrives at an optimal solution to a problem. This idea sounds interesting, but it doesn’t really explain why the representations of optimal models should be similar.

The Simplicity Bias Hypothesis. All neural networks, even unnecessarily large ones and ones that lack regularization, tend to arrive at the simplest representations. (We actually touched on this topic last week; have a read if you missed it).
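
The intuition behind the first of these ideas, that every extra constraint leaves fewer acceptable solutions, can be illustrated with a toy example. The sketch below is my own illustration, not something from the paper: it treats every boolean function on three bits as a candidate "model" and counts how many remain consistent with a hypothetical target as labeled examples are added one at a time.

```python
from itertools import product

# All 8 possible 3-bit inputs.
INPUTS = list(product([0, 1], repeat=3))
# Every boolean function on those inputs, stored as a tuple of 8 outputs (256 total).
HYPOTHESES = list(product([0, 1], repeat=len(INPUTS)))

def target(x):
    """Hypothetical ground truth: majority vote of the three bits."""
    return int(sum(x) >= 2)

def consistent_count(num_constraints):
    """Count hypotheses that match the target on the first num_constraints inputs."""
    constraints = [(i, target(INPUTS[i])) for i in range(num_constraints)]
    return sum(all(h[i] == y for i, y in constraints) for h in HYPOTHESES)

counts = [consistent_count(m) for m in range(len(INPUTS) + 1)]
print(counts)  # [256, 128, 64, 32, 16, 8, 4, 2, 1]
```

In this toy setting each new constraint halves the number of surviving candidates. Real networks live in continuous spaces where nothing this tidy holds, but the direction of the effect is the same: more constraints, fewer solutions that satisfy all of them.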

The paper goes on to discuss what kinds of representations are being converged to, and some implications of that convergence. But, before we finish up, I think it’s important to mention some counterexamples and limitations of their research:

Representations of modality-specific concepts, such as visually experiencing the beauty of a total solar eclipse, can’t be learned solely from other modalities.

Not all representations are presently converging.

The demographic bias of the people creating AI models might also accidentally bias the models toward similar representations.

The level of measured alignment might actually be quite small. For example, the maximum measured alignment in the DINOv2 figure above is 0.16 on a scale of 0 to 1. The researchers aren’t sure whether this is indicative of peak alignment or not.

After reading this paper, I’m not convinced that neural networks’ representations are converging. But I do think that the idea is interesting and plausible, and this paper introduces lots of different avenues for further exploration. For example, I’d love to see more investigation into methods of measuring alignment and a more comprehensive analysis of what kinds of representations align, how much alignment varies across different kinds of representations, and how alignment changes as models change.