Oh, your AI only generates 2D images? How cute.

Time for 4D!

Intrepid readers! Have you already become bored generating flat, intangible images from your text descriptions with your Stable Diffusion algorithms? Have you, in fact, already become bored with your merely three-dimensional lives? Fear not! AI has already launched itself into the fourth dimension.

If you’re like me, you may be skeptical of claims about higher dimensions. When this paper says 4D, it means that it can generate an animated 3-dimensional scene from any text you give it. In my opinion, that is legitimately four dimensions. How it manages to accomplish this is elucidated below.

— Tyler & Team

Paper: Text-To-4D Dynamic Scene Generation

Summary by Adrian Wilkins-Caruana

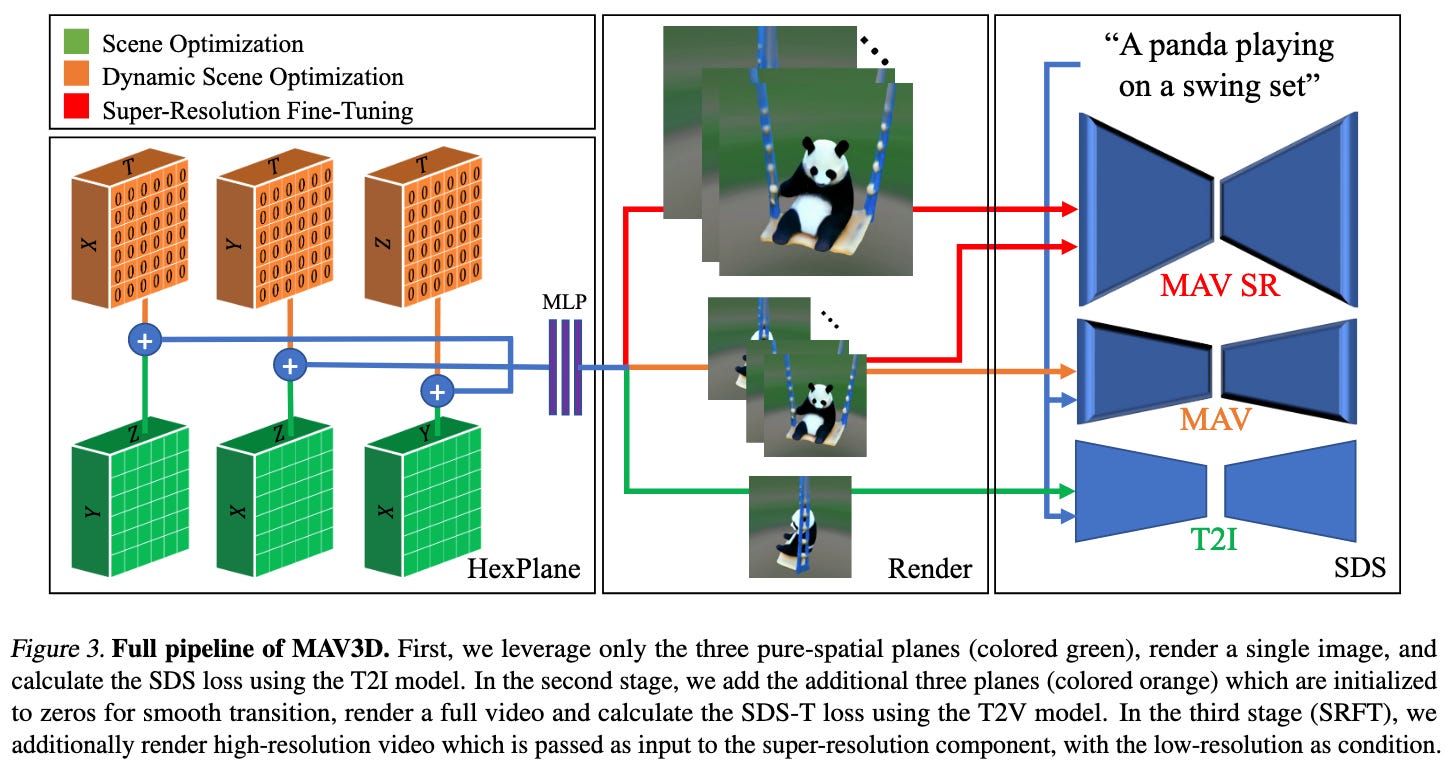

MAV3D (Make-A-Video3D) is a method for generating 3D animations from text descriptions. Remarkably, it does this without any 3D or 4D (3D + time) training data. MAV3D works by extending established text-to-image models both temporally (for text-to-video) and spatially (for text-to-scene). This approach overcomes 3 key challenges: training without 4D labels, 3D scene reconstruction, and efficiently representing 4D scenes.

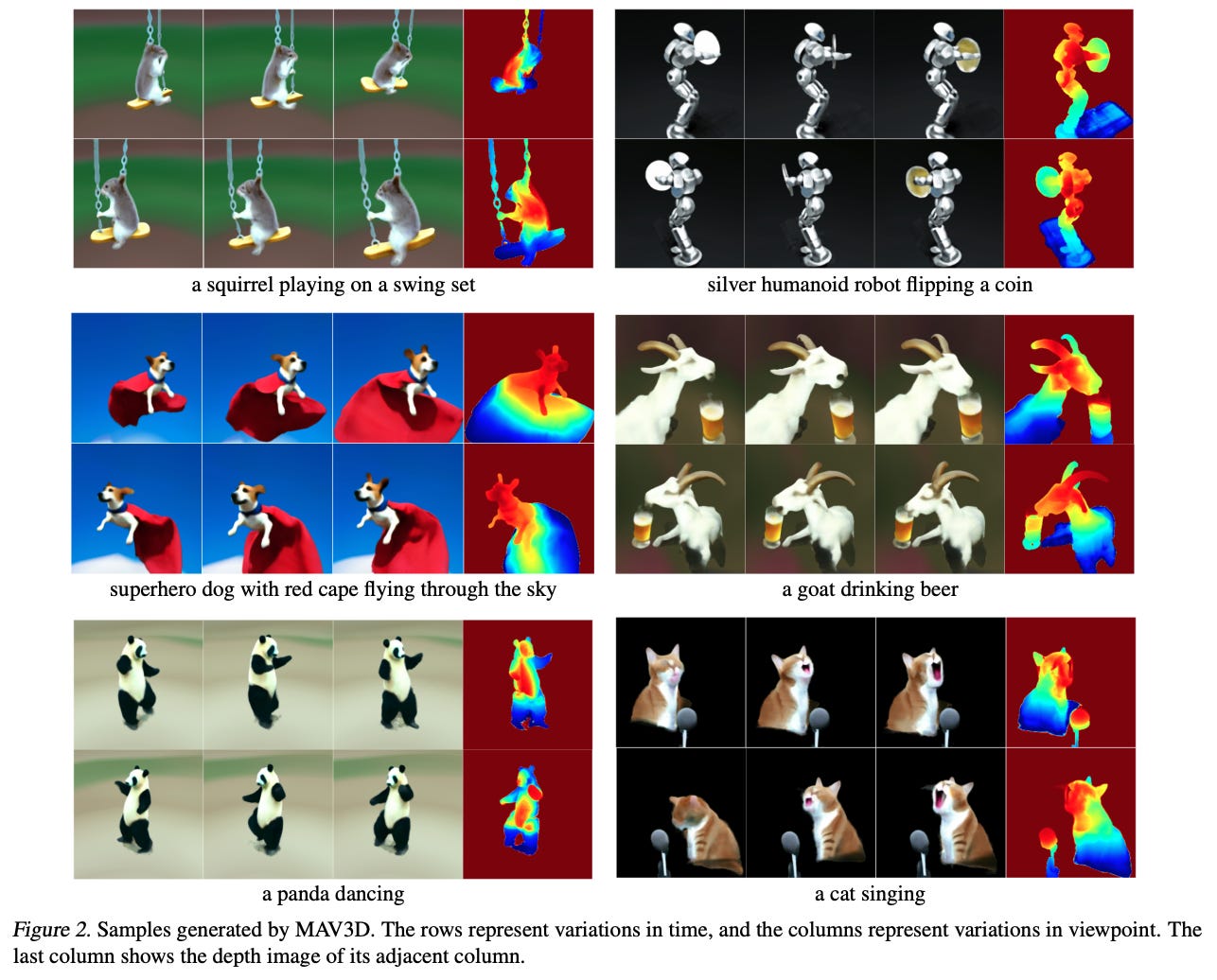

Here’s an example of MAV3D’s output:

MAV3D isn’t trained on descriptions of 4D scenes. Instead, it relies on generative models that were trained on text-image data. MAV3D involves 3 main parts. First, a text-to-video model (a text-to-image model extended in time) generates video frames from a text description, creating data with 2 spatial dimensions and 1 temporal dimension. Next, the video-generation process is repeated for the same text description to create more videos of the same scene from different viewpoints. Then, based on these videos, MAV3D uses an established 3D reconstruction technique (NeRF) to work out the 3D scene for each frame, resulting in a 4D scene!

A naive 4D representation would need prohibitively more memory than a 2D one, so MAV3D has to get creative. Where a text-to-image model works with 2D features (since images are 2D), MAV3D uses 6 sets of 2D features, called feature planes, to represent information across 4 dimensions. There are 3 spatial feature planes (xy, xz, and yz) and 3 temporal feature planes (xt, yt, and zt). Because there are 6 of them, these planes must have a much lower resolution than the images a text-to-image model produces. However, 3D reconstruction from low-res video yields a low-quality 3D scene, so in a final stage MAV3D uses a super-resolution model to increase the 2D resolution of each frame, producing a high-quality 3D animation.
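To make the feature-plane idea more concrete, here is a minimal NumPy sketch of a six-plane lookup. It assumes the HexPlane-style combination rule (multiply each spatial plane's features with those of the temporal plane over the two remaining axes, then concatenate); treat that, the grid size, the channel count, and every helper name (`make_planes`, `bilinear`, `query`, `param_counts`) as illustrative assumptions rather than MAV3D's actual code. It also counts stored values to show why planes save memory: a dense 4D grid grows as R⁴, while six planes grow only as 6R². The small network that would decode these features into color and density is omitted.

```python
import numpy as np

# Hypothetical sizes chosen for illustration; not taken from the paper.
RES = 64        # grid cells along each axis (x, y, z, t)
CHANNELS = 16   # feature channels stored per grid cell

# Assumed HexPlane-style pairing: each spatial plane is matched with the
# temporal plane spanning the two axes it does not cover.
PAIRS = [(("x", "y"), ("z", "t")),
         (("x", "z"), ("y", "t")),
         (("y", "z"), ("x", "t"))]


def param_counts(res=RES, channels=CHANNELS):
    """Return (values in a dense 4D grid, values in six 2D planes)."""
    dense_4d = res ** 4 * channels
    six_planes = 6 * res ** 2 * channels
    return dense_4d, six_planes


def make_planes(res=RES, channels=CHANNELS, seed=0):
    """Create six randomly initialized (res, res, channels) feature planes."""
    rng = np.random.default_rng(seed)
    names = ["xy", "xz", "yz", "xt", "yt", "zt"]
    return {n: rng.normal(scale=0.1, size=(res, res, channels)) for n in names}


def bilinear(plane, u, v):
    """Bilinearly sample a (res, res, C) plane at continuous coords u, v in [0, 1]."""
    res = plane.shape[0]
    fu, fv = u * (res - 1), v * (res - 1)
    i0, j0 = int(np.floor(fu)), int(np.floor(fv))
    i1, j1 = min(i0 + 1, res - 1), min(j0 + 1, res - 1)
    du, dv = fu - i0, fv - j0
    return ((1 - du) * (1 - dv) * plane[i0, j0]
            + du * (1 - dv) * plane[i1, j0]
            + (1 - du) * dv * plane[i0, j1]
            + du * dv * plane[i1, j1])


def query(planes, point):
    """Feature vector for one (x, y, z, t) point; coordinates lie in [0, 1]."""
    feats = []
    for (a, b), (c, d) in PAIRS:
        spatial = bilinear(planes[a + b], point[a], point[b])
        temporal = bilinear(planes[c + d], point[c], point[d])
        feats.append(spatial * temporal)   # element-wise product per pair
    return np.concatenate(feats)           # length 3 * CHANNELS


if __name__ == "__main__":
    dense, planes_count = param_counts()
    print(f"dense 4D grid: {dense:,} values")
    print(f"six planes:    {planes_count:,} values ({dense / planes_count:.0f}x fewer)")
    planes = make_planes()
    feat = query(planes, {"x": 0.3, "y": 0.7, "z": 0.5, "t": 0.25})
    print(feat.shape)  # (48,)
```

The design trade-off is the one the summary describes: factoring 4D space into 2D planes keeps memory manageable, but each plane can only afford a modest resolution, which is why a later super-resolution stage is needed.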

Here’s a diagram showing how a text-to-image model, a text-to-video model, and a super-resolution model are used together to optimize the 6 feature planes:

And here are some MAV3D examples! Each row shows a different viewpoint, and the columns show the temporal progression. The rightmost column colors the 3D scene by depth: