LLMs can learn to use tools

An easy next step in LLM evolution: Integrating with existing systems

Did you know that corvids (such as crows and ravens) can use tools? Some have been observed using sticks, held in their beaks, to forage for food.

I’m proud to say that I, too, can use tools. I use my smart watch to obtain necessary energy supplements (aka “matcha lattes”) on a regular basis. Sometimes I use grep to search through files, and if I get a flat tire, I am quite proficient at using a tool (my phone) to fix the issue (call for help). We’re more powerful with tools.

LLMs today are like snapshots of isolated people frozen in time and space. They don’t learn beyond their training phase, and on their own they can’t perform internet searches on your behalf. If you think of an LLM as your assistant, it would be nice to let that assistant perform Google searches, use a calculator, or add things to your calendar. With tools like these, an already-magical LLM adds a few new tricks to its repertoire.

We’ll discuss two papers:

The Toolformer paper, which focuses on teaching LLMs to organically use tools as part of their natural text generation. I see this as a low-level approach that can make an LLM fundamentally more capable and less likely to make factual errors.

The ReAct paper, which outlines a higher-level reasoning-oriented loop to help an LLM work through more difficult challenges.

— Tyler & Team

Paper: Toolformer: Language Models Can Teach Themselves to Use Tools

Summary by Adrian Wilkins-Caruana

Like many people, LLMs are bad at arithmetic. But, also like people, they can do well if you give them a calculator. For example, if an LLM needs to say “the square root of 64 is …,” instead of predicting the next token as “8,” the LLM can learn to say “[Calculator(sqrt(64))]” and let the calculator supply the answer. Enter Toolformer, an LLM created by Schick et al. They show that Toolformer can learn when to use different tools (such as a calculator) mostly on its own.
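To make the calculator example concrete, here is a minimal sketch of what a runtime might do when it sees an inline call in generated text. Everything here is an assumption for illustration: the `TOOLS` registry, the `run_inline_calls` helper, and the tiny arithmetic evaluator are hypothetical, and the sketch simply swaps the call for its result rather than reproducing how the paper feeds results back to the model.

```python
import ast
import math
import operator
import re

# Hypothetical mini-calculator: supports + - * / and sqrt() only.
_BIN_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}
_FUNCS = {"sqrt": math.sqrt}


def _evaluate(node):
    """Recursively evaluate a small, whitelisted subset of Python expressions."""
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _BIN_OPS:
        return _BIN_OPS[type(node.op)](_evaluate(node.left), _evaluate(node.right))
    if (isinstance(node, ast.Call) and isinstance(node.func, ast.Name)
            and node.func.id in _FUNCS):
        return _FUNCS[node.func.id](*[_evaluate(arg) for arg in node.args])
    raise ValueError("unsupported expression")


def calculator(expression: str) -> str:
    """Evaluate an arithmetic expression and format the result compactly."""
    tree = ast.parse(expression, mode="eval")
    return f"{_evaluate(tree.body):g}"


# Illustrative tool registry; a real system would register more tools here.
TOOLS = {"Calculator": calculator}

# Matches inline calls such as [Calculator(sqrt(64))].
CALL_PATTERN = re.compile(r"\[(\w+)\((.*?)\)\]")


def run_inline_calls(text: str) -> str:
    """Replace each recognized inline tool call with the tool's output."""
    def replace(match):
        tool = TOOLS.get(match.group(1))
        if tool is None:
            return match.group(0)  # leave unknown calls untouched
        return tool(match.group(2))
    return CALL_PATTERN.sub(replace, text)


print(run_inline_calls("the square root of 64 is [Calculator(sqrt(64))]"))
```

The point of the sketch is only that the model emits text, and something outside the model notices the bracketed call and runs it.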

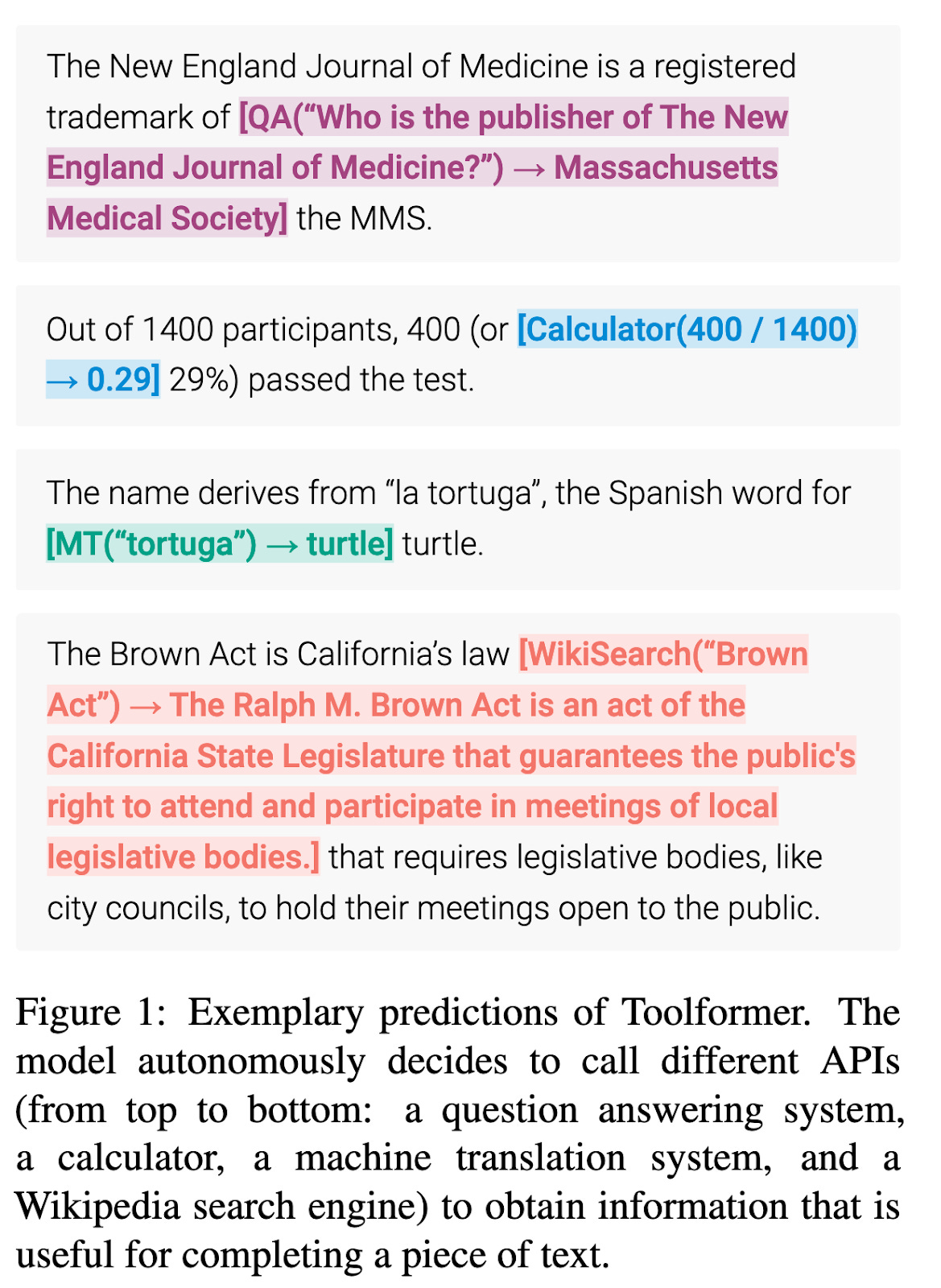

Here are some examples of Toolformer using four kinds of tools:

Not only does Toolformer have to independently learn when it should delegate something to an external tool, such as an API, but it also figures out how to use the tool. This matters because hand-crafting instructions for how and when the model should use many different tools would be impractical. Such instructions also turn out to be unnecessary, since Toolformer can improve how and when it uses tools on its own. Here’s how it works:

Toolformer needs to see a few examples of how a tool should be used to help it get started. The examples are hand-labeled instances of how Toolformer might use one of the tools (e.g., a calculator or a Wikipedia search engine). The researchers then prompt the model to annotate a large text dataset with the places where it thinks a tool could be used. For instance, the dataset may contain “The capital of New Hampshire is Concord,” so Toolformer would annotate it like this: “The capital of New Hampshire is [QA(“What’s the capital of New Hampshire?”)] Concord”.
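The annotation format itself is easy to picture in code. The sketch below only shows the shape of an annotated sentence; the `annotate` helper is hypothetical, and in the paper it is the model, not a string search, that decides where a call goes and what it asks.

```python
def annotate(text: str, answer: str, tool: str, query: str) -> str:
    """Insert a bracketed tool call immediately before the first occurrence of `answer`."""
    index = text.find(answer)
    if index == -1:
        raise ValueError("answer not found in text")
    call = f'[{tool}("{query}")] '
    return text[:index] + call + text[index:]


example = annotate(
    "The capital of New Hampshire is Concord.",
    answer="Concord",
    tool="QA",
    query="What's the capital of New Hampshire?",
)
print(example)
```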

Here’s a hand-labeled example of a QA tool and a prompt to generate more such examples:

The provided examples make it clear how to use an API, but they’re less clear about when to use one. This means that sometimes the LLM generates an API annotation that isn’t really helpful for predicting the text that follows. For instance, it might generate “The capital losses were [QA(“What’s the capital of losses?”)] debilitating,” which doesn’t make sense in this context. To learn when a call is worthwhile, the researchers filter the annotations: they execute each call and check whether seeing the call and its response makes the model better at predicting the actual following text (here, “debilitating”). If it helps by a sufficient margin, as it would for “Concord” in the earlier example, the annotation is kept; otherwise it’s discarded. Toolformer is then fine-tuned on the annotations that survive.
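Here is a rough sketch of that filtering idea: keep a candidate call only if including the call and its response lowers the loss on the following text by at least some threshold, compared with the better of "no call" and "call without a response." The `toy_loss` function is a crude stand-in for a real language-model loss (it just counts continuation words not yet seen in the context), and the `Candidate` class, the threshold value, and the made-up tool response for the bad example are all assumptions for illustration.

```python
import re
from dataclasses import dataclass
from typing import Callable


@dataclass
class Candidate:
    prefix: str        # text before the proposed call
    call: str          # the proposed tool call
    response: str      # what the tool returned (made up here)
    continuation: str  # the text that actually follows in the dataset


def toy_loss(context: str, continuation: str) -> float:
    """Stand-in for an LM loss: fraction of continuation words absent from the context."""
    seen = set(re.findall(r"\w+", context.lower()))
    words = re.findall(r"\w+", continuation.lower())
    if not words:
        return 0.0
    return sum(word not in seen for word in words) / len(words)


def is_useful(candidate: Candidate,
              loss: Callable[[str, str], float] = toy_loss,
              threshold: float = 0.5) -> bool:
    """Keep the call only if call + response beats both baselines by `threshold`."""
    c = candidate
    without_call = loss(c.prefix, c.continuation)
    call_only = loss(f"{c.prefix} [{c.call}]", c.continuation)
    call_and_response = loss(f"{c.prefix} [{c.call} -> {c.response}]", c.continuation)
    return min(without_call, call_only) - call_and_response >= threshold


good = Candidate(
    prefix="The capital of New Hampshire is",
    call='QA("What\'s the capital of New Hampshire?")',
    response="Concord",
    continuation="Concord",
)
bad = Candidate(
    prefix="The capital losses were",
    call='QA("What\'s the capital of losses?")',
    response="unknown",  # hypothetical tool output
    continuation="debilitating",
)

kept = [c for c in (good, bad) if is_useful(c)]
print([c.prefix for c in kept])
```

With a real model, `loss` would be a cross-entropy over the continuation tokens, and the threshold would be a tuned hyperparameter.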

Here’s a diagram of how Toolformer generates API calls and how it filters the good API calls from the bad:

Toolformer’s API-calling ability substantially improves its zero-shot performance on downstream tasks. The authors report that it is often competitive with much larger models, and that its tool usage doesn’t reduce its core language modeling ability.

Paper: ReAct: Synergizing Reasoning and Acting in Language Models

Summary by Tyler Neylon in cahoots with GPT-3.5-turbo

This paper introduces ReAct, a method that helps large language models make better decisions by interleaving reasoning with task-specific actions. It’s like cooking a dish in the kitchen: between actions, we might use language to track progress, adjust the plan, or look up information. ReAct does something similar, using reasoning to figure out what actions to take and using actions to gather more information. The authors tested ReAct on different tasks like answering questions and making decisions in a game, and it worked better than the other methods they compared it to. It also makes the model’s behavior easier for humans to follow.

This figure from the paper illustrates two open-ended tasks that we might ask an agent to solve, along with different approaches to help an AI agent iteratively solve those tasks. Notice how the ReAct framework, in the right column, provides a structured method of reasoning with observations for the LLM to work with:

The authors ran experiments comparing ReAct to alternatives on tasks like answering complex questions and making decisions in interactive environments. They found that ReAct did better because it could recover from problems such as mistakes or confusion during reasoning, and because it made better decisions in complex environments.

To return to the kitchen: when you cook a new dish, you reason about what ingredients you have, what steps to take, and what to do if something goes wrong, all while taking actions like chopping vegetables or turning on the stove. ReAct helps language models interleave reasoning and acting in the same way. The authors also found that ReAct generates more interpretable decision traces, which means it’s easier for humans to understand how the language model is making decisions.
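The thought, action, observation loop can be sketched in a few lines. This is a toy under stated assumptions, not the authors’ implementation: `scripted_model` stands in for a real LLM call, `FACTS` stands in for a real search API, and the `react` function and its transcript format are hypothetical. The `Search[...]` and `Finish[...]` action style follows the examples in the paper.

```python
import re
from typing import Callable, Optional, Tuple

# Hypothetical knowledge base standing in for a real search API.
FACTS = {"New Hampshire": "New Hampshire is a U.S. state. Its capital is Concord."}


def search(entity: str) -> str:
    return FACTS.get(entity, f"No entry found for {entity}.")


TOOLS = {"Search": search}

# Actions look like Name[argument], e.g. Search[New Hampshire] or Finish[Concord].
ACTION_PATTERN = re.compile(r"^(\w+)\[(.*)\]$")


def scripted_model(transcript: str) -> Tuple[str, str]:
    """Stand-in for an LLM: returns (thought, action) given the transcript so far."""
    if "Observation" not in transcript:
        return ("I need to look up New Hampshire to find its capital.",
                "Search[New Hampshire]")
    return ("The search result says the capital is Concord.", "Finish[Concord]")


def react(question: str,
          model: Callable[[str], Tuple[str, str]],
          max_steps: int = 5) -> Tuple[Optional[str], str]:
    """Alternate thoughts, actions, and observations until the model finishes."""
    transcript = f"Question: {question}"
    for step in range(1, max_steps + 1):
        thought, action = model(transcript)
        transcript += f"\nThought {step}: {thought}\nAction {step}: {action}"
        match = ACTION_PATTERN.match(action)
        if match is None:
            transcript += f"\nObservation {step}: Could not parse action."
            continue
        name, argument = match.groups()
        if name == "Finish":
            return argument, transcript
        tool = TOOLS.get(name)
        observation = tool(argument) if tool else f"Unknown action {name}."
        transcript += f"\nObservation {step}: {observation}"
    return None, transcript  # ran out of steps without finishing


answer, trace = react("What is the capital of New Hampshire?", scripted_model)
print(trace)
print("Answer:", answer)
```

The printed trace is the “interpretable decision trace” in miniature: each step records why the model acted and what it saw in response.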