I have seen the future of image-generating AI

And it's built with structured control

As an egregious oversimplification, we can split modern image-creation tools into two buckets: old-school (pre-AI) Photoshop and DALL•E. A Photoshop file is precision-structured. You have layers, channels, masks, gradients, brushes, and three hundred features I don’t even know about. When you move over to DALL•E, you’ve got one unstructured text blob, and a pile of pixels.

From the user-interaction perspective, these are polar opposites. Essentially everything you do in Photoshop is well-defined and more-or-less deterministic. On the other hand, the main interaction with DALL•E is to … edit your prompt. You can add the phrase “in a bright color scheme” to your text, but there are no guarantees about what will happen. It doesn’t just change your color scheme; it probably changes everything about your image.

From one point of view, the free-form input to DALL•E is closer to the voice-driven interface of the USS Enterprise’s computer. It’s easy to use; it feels like a good default interface. But! Sometimes you want structure. So I’m making up the term “structured control,” inspired by this week’s paper. In my mind, even better than a black box built around just text is a tool that offers both the simplicity of natural language as well as the more clearly defined “structured” controls that come with traditional tools like Photoshop. When we think about the future of image generation as a product (not as a fun thing to build), I believe this is a key foundational concept.

— Tyler & Team

Paper: Composer: Creative and Controllable Image Synthesis with Composable Conditions

Summary by Adrian Wilkins-Caruana

Promptable image generation models like Stable Diffusion let you quickly generate an image with a text prompt. Using elaborate prompts is a common way to control how the generated image looks. For example, the images below were generated with the prompts “Abraham Lincoln” and “Abraham Lincoln, photorealistic, Blade Runner 2049 cyberpunk aesthetic, cinematic movie still,” respectively. But Composer (the new tool introduced in this paper) takes a different approach and instead uses image features — such as palette, semantics, or shape — to directly control the style of the image.

Composer lets you mix and match different visual aspects of one or more images. In the example below, different sets of color palettes and brightness maps are mixed to control the generated images.

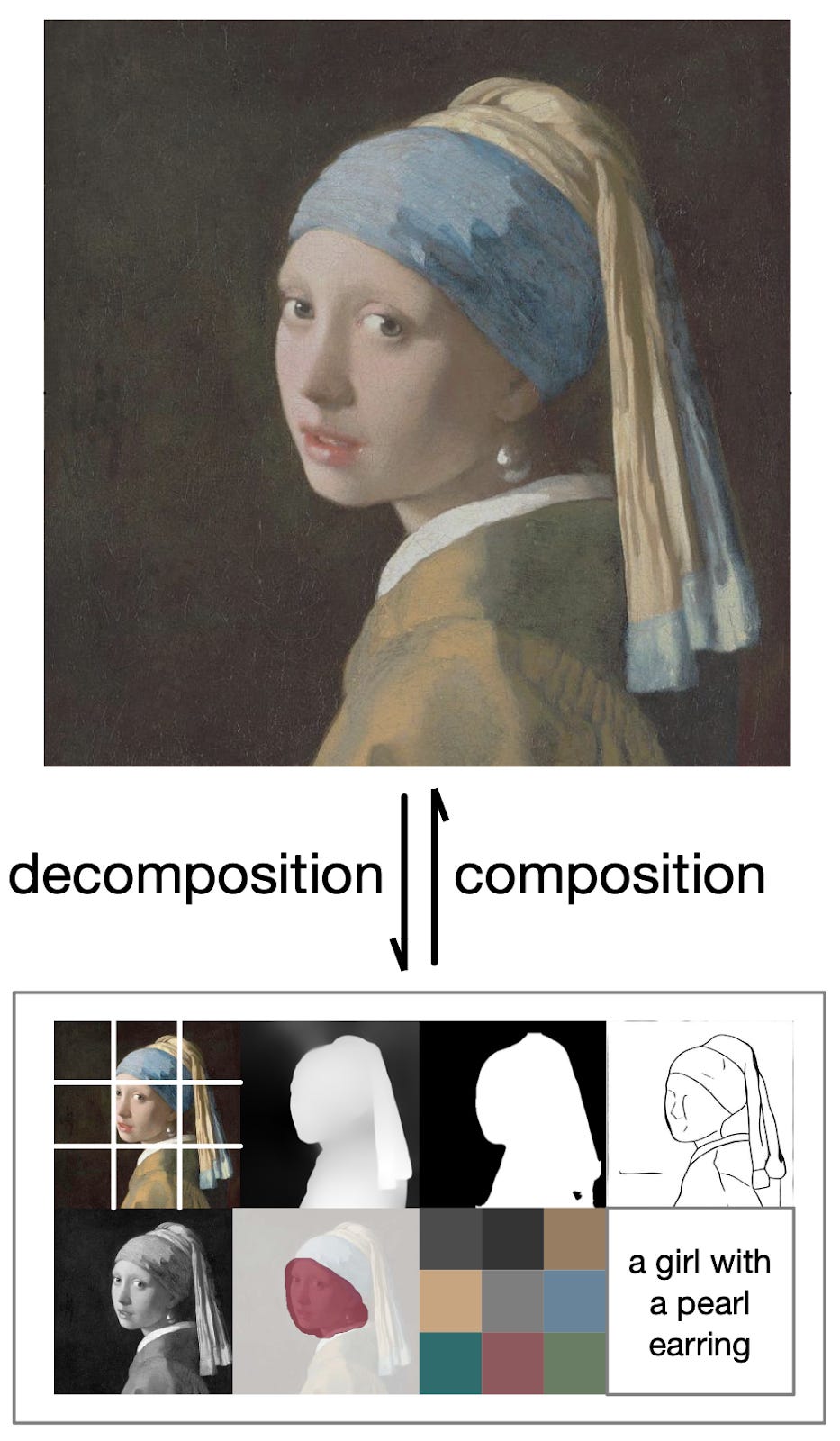

This method works by first decomposing an image into 8 features, which are extracted from the image using various computer vision and machine learning algorithms. The 8 image features are: semantics and style, depthmap, instance segments, sketch, brightness map, masking, color palette, and caption. Some of these features can be extracted with simple algorithms (like sketch, which uses edge detection), while others require more complex, task-specific neural networks (like depthmap, which uses depth-prediction models). The figure below shows an example of the image features that can be extracted from this famous painting.

Composer is trained to reconstruct images using their features, where each training step involves a decomposition and recomposition step. Some of the features contain global information, such as the text caption, the image embedding, and the color palette, while others contain localized information, like the sketch, depth maps, and brightness maps.

An issue with this setup is that Composer might come to rely on all of the image features for reconstruction, but it would be better if Composer could also reconstruct images from a subset of features (e.g., just a palette and a brightness map). To deal with this, the authors used dropout to randomly eliminate some image features during training. They chose a dropout rate of 50% for each component except for the brightness map, where they used a 70% dropout rate since it contains a lot of information about what the final image should look like.

Even though Composer is only trained to reconstruct images from their features, it can do other things too, such as perform style transfers or image interpolation. It can even restrict these operations to particular regions of an image when combined with the masking feature, as you can see below.