How to Train a Drag-GAN

Manipulating objects in static images

When I was a grad student, I worked with Professor Mehryar Mohri at New York University. One day he told me: “Machine learning is just like compression. Do you see why?”

Here’s some intuition: Pretend there’s a single best artist in the world. I hand this artist a piece of paper that says “a photo of a woman’s face,” and the artist hands me back a newly-created photo of a woman’s face. It may not be exactly the person I had in mind, but it’s still a photo of a face. In one sense, my image description is a form of lossy compression.

If I want the re-created photo to be less lossy (more accurate), I can add more text to my description. Maybe I specify that her hair is a little longer, for example. As I add text, the photo gradually converges on the exact image I am “compressing.”

In this thought experiment, my brain is like an image encoder (image-to-text) and the artist is a decoder (text-to-image); what we both share is an awareness of things that can be in photos, like faces. The best possible compression algorithm is one that deeply understands what can be in a photo — and all the little variations that are possible. We started with compression, but found ourselves in a place where the algorithm understands what’s in images as a by-product. (And, yep, this thought experiment is a lot like an autoencoder!)

Now imagine you have programmatic access to both a great writer (image-to-text) and a great artist (text-to-image). Suddenly new opportunities arise, and that’s where we seem to be right now. This week’s paper takes advantage of both sides of our thought experiment — encoder/writer and decoder/artist — with a creative and impressive technique.

— Tyler & Team

Paper: Drag Your GAN: Interactive Point-Based Manipulation on the Generative Image Manifold

Summary by Adrian Wilkins-Caruana

Imagine standing in front of the Leaning Tower of Pisa, striking the iconic pose that makes it look like you're holding up the tower with your hands. You snap the picture, eager to share this fun moment with your friends. But when you look at the photo once you’re back home from your Italian vacation, you notice that your hands were misaligned with the tower! It looks like you're swatting at a giant fly rather than propping up a historic landmark. If only there were a way to fix your photo. Enter DragGAN, an innovative method that lets you use an AI model to precisely manipulate digital images! Here’s an example showing how you can use tracking points to turn a lion’s head:

As the name suggests, DragGAN uses a Generative Adversarial Network (GAN), which is a method for training a neural network that generates things, like images. GANs are trained using a second neural network, called a discriminator, that judges how good the generative network is at producing stuff (such as, in DragGAN's case, fake images). DragGAN is actually derived from a more advanced GAN called StyleGAN, which focuses on generating high-quality and highly customizable images by learning and controlling different aspects of the image’s style, such as facial features, background, and artistic details.

The way that DragGAN uses StyleGAN to manipulate images involves two clever concepts, which the authors call motion supervision and point tracking. But before we discuss these concepts, we need to understand a bit more about StyleGAN.

In many ways, StyleGAN is like a big mathematical cake. This cake is square and has many layers. The bottom layer (the image) is large, thin, and crumby: an array of millions of numbers that describes the color at every point in the image. Above the image base is the first feature-map layer, which is a bit thicker and is also composed of numbers. But, rather than describing color, each of the numbers in this layer contains information describing other aspects of the part of the image directly beneath it, like edges, textures, and patterns. The cake has several more of these feature-map layers, each progressively thicker, and each with numbers representing more and more abstract stuff about the part of the image beneath, like "nose" or "pencil." Of course, the cake wouldn't be complete without a cherry on top: a latent code, which is a pretty small vector, containing only 512 numbers. Its job is to kind of embody everything about the cake beneath. StyleGAN’s party trick is that it can use just the latent-code cherry to generate the whole cake, layer by layer, including the image at the base (kind of like a CNN-based autoencoder).

So how do we use this mathematical cake to manipulate the image? In the lion example above, we want to move the part of the crumby image base that’s at the red dot to the blue dot. So, first we slice the cake open horizontally to look at the 6th feature-map layer (I'll explain why DragGAN uses this particular layer in a moment), and we use a new method called motion supervision to nudge the features in the feature map that are directly above the red dot (a.k.a. the source point) on the base slightly in the direction of the blue dot (a.k.a. the destination point). Motion supervision directly optimizes the 512 numbers in the latent code vector such that, when the code is used as an input to StyleGAN’s image-generator, the generator’s weights will generate a feature map that reflects the nudged location of the patch near the red dot.

So far, we’ve changed the 6th feature-map layer, and determined a new latent-code cherry using motion supervision. By finding new latent code, we’ve also implicitly changed the image at the base of the cake. We can now use StyleGAN’s party trick to turn the latent code into an image and see how it’s changed.

What we should see in this new image is that the part of the image at the red dot (i.e., the lion’s nose) has moved slightly in the direction of the blue dot. But, because we shifted the 6th, somewhat abstract, somewhat realistic map of image features, it may have changed the image in ways we didn’t expect. Because we didn’t change the 6th feature map very much, the lion’s nose should be in the vicinity of where the red dot was nudged. So, we use point tracking to keep track of where the red dot actually moved by searching the neighborhood of features around the original red dot to find where the updated red dot has actually been moved. It’s okay if the red dot moved in an unexpected direction; we’ll get to how DragGAN deals with this in a moment.

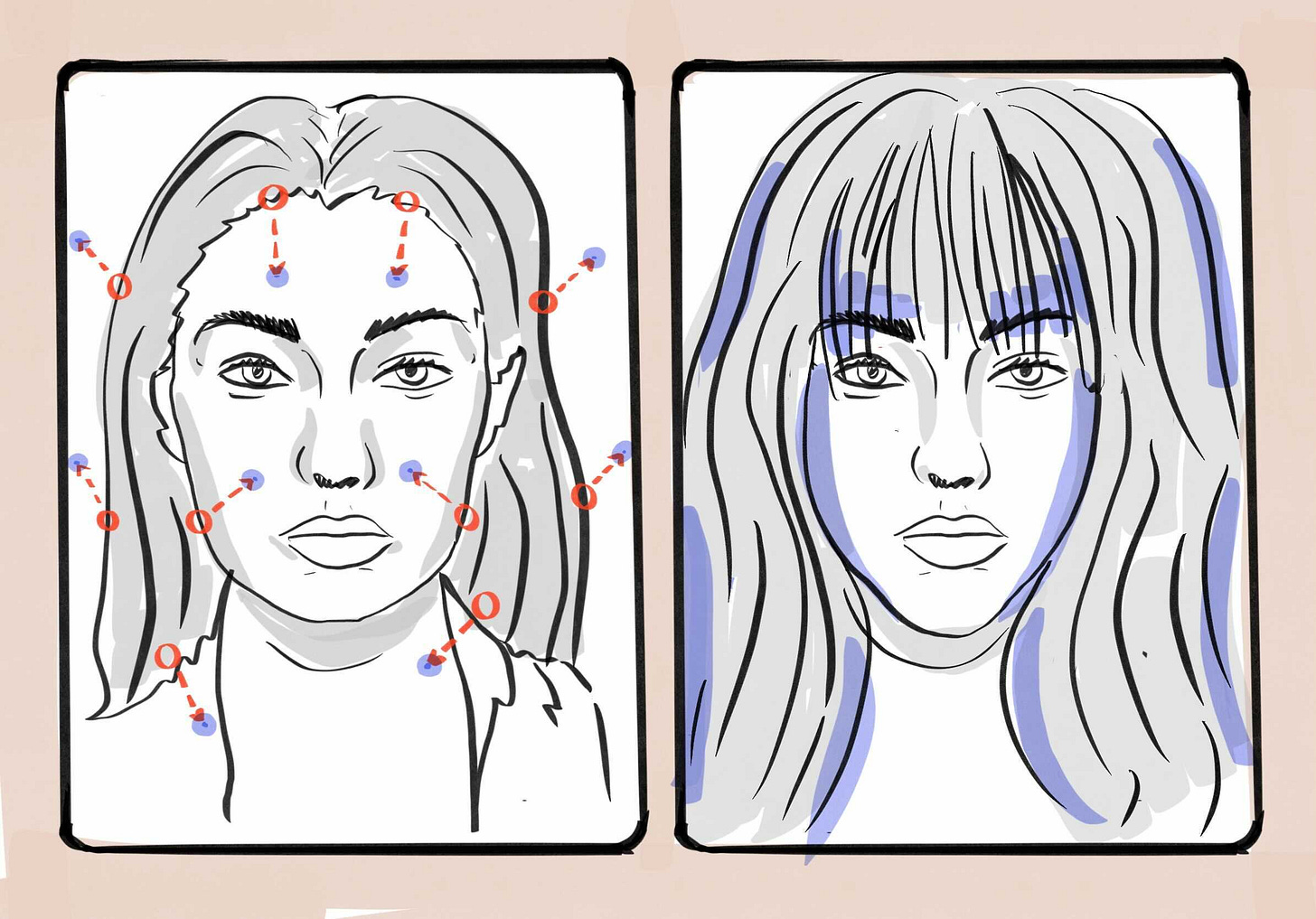

The figure below shows how DragGAN uses motion supervision and point tracking to move the red dot slightly in the direction of the blue dot.

These two steps have successfully generated a new image, with the red dot moved somewhat in the direction of the blue dot. DragGAN then repeats the motion-supervision and point-tracking steps until it’s moved the red dot all the way to the blue dot. The reason we shift the 6th feature-map layer of the StyleGAN cake is because it’s neither too thick nor too thin (remember, the layers get thicker as you go up the stack). If it were too thin, then motion supervision would just move individual pixels, which isn’t enough to rotate the lion’s entire head. If it were too thick, motion supervision could change large portions of the image in unexpected ways, like turning the lion into a giraffe.

Unfortunately, DragGAN only works on StyleGAN-generated images, not real images; in other words, you have to start with the latent code, not an image. But you can actually run DragGAN on your own images (like that Leaning Tower pic) thanks to something called GAN Inversion, which turns any image into a latent code. From there, you can run DragGAN as normal.

Unlike existing methods, DragGAN doesn’t need any annotated image-manipulation training data, and it’s much better at following user instructions. DragGAN isn’t perfect: It relies on things that StyleGAN has seen during training when manipulating images, meaning it can generate weird results if you ask it to make an arm bend in a way it’s never seen before. Nonetheless, I can see this kind of method being quite useful in general-purpose image-editing applications like Photoshop, like giving your horse some serious pizzazz.