How to make more faithful images with training captions

Paper: A Picture is Worth a Thousand Words: Principled Recaptioning Improves Image Generation

Summary by Adrian Wilkins-Caruana

A few weeks ago, we explored a paper that addressed the challenge of making text-to-image models generate high-quality images. In that paper, the researchers identified that the source of the challenge is the low-quality images that are used to train such models. They addressed this issue by fine-tuning their model with carefully chosen training images, yielding a system that can generate beautiful images with simple prompts like “orange juice,” instead of sophisticated ones like “cinematic orange juice, shallow depth of field, high contrast.” But what if the issue goes deeper than just low-quality training images? More specifically, what is the effect of mediocre image captions on a trained text-to-image model?

Text-to-image models are trained using images from the internet and their HTML alt text attribute, which is found in the website’s HTML source code; for example: <img src="cover.jpg" alt="Cover of Oregon Wine Press February 2019">. Can you see any issues with using such text to train a text-to-image model? The attribute describes what the image is instead of what’s in the image! The LAION (Large-scale Artificial Intelligence Open Network) dataset, which is used to train text-to-image models like Stable Diffusion, is riddled with such examples, like the one of “cover.jpg” below.

Researchers from Google are trying to address this problem by improving the training data. Specifically, they’re trying to recaption images from the internet using image-to-text models. Their approach is quite simple: First, they fine-tune an automatic captioning model, then they relabel the images in the training data with automatically generated captions, and finally, they fine-tune Stable Diffusion on the images and new captions.

A non-obvious step in this method is the fine-tuning of an automatic captioning model. If the model can already automatically caption an image, why does it need to be fine-tuned? The reason, as the paper’s title suggests, is that “A picture is worth a thousand words.” Images can contain a lot of information, and it could take several paragraphs to describe a complete image. So generated captions need to capture as much detail as possible in a small amount of text. The authors started with a pre-existing captioning model called PaLI (Pathways Language-Image model). Unfortunately, this model makes captions that are often lacking in detail. So the authors fine-tuned it to generate captions that describe the images in more depth. The fine-tuned model, which the authors called RECAP, can generate both short and long captions, as shown here.

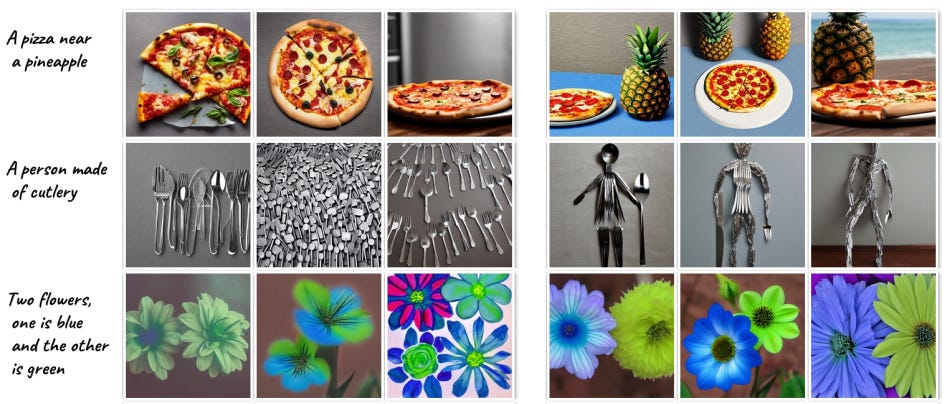

The researchers then fine-tuned Stable Diffusion on the recaptioned images. They found that using a 50-50 split of short captions and long captions (both from RECAP) yielded the best image-generation model. Compared to a model trained on alt text captions (extracted from html), the fine-tuned model using RECAP captions scored 1%-24% better across seven different metrics. Also, when given four chances to generate images from 200 random prompts, compared to using alt-text training data, the fine-tuned model was 52% more likely to have all four images follow the prompt exactly, and 40% more likely to have at least one image that followed the prompt exactly. In the examples below, you can see how the RECAP-based model (right) is better than the previous, non-RECAP-based model (left) at following the prompts.

It’s no surprise that these improved captions make for better text-to-image models, especially compared to alt text captions as a baseline. But imagine all the other ways a recaptioning model could be used to emphasize other aspects of the training images, such as their photographic style, their color palette, or the position of items in the image. These captions could be used to train text-to-image models with unique abilities. This paper’s results are a reminder that training data is often the most important part of machine learning projects — a model’s output frequently reflects its training in obvious ways, while in some cases the effects are quite subtle.