How to build a lensless camera

[Paper: DifuzCam: Replacing Camera Lens with a Mask and a Diffusion Model]

Summary by Adrian Wilkins-Caruana

Have you ever wondered why cameras have lenses? The camera on your phone and our eyes are common examples of image sensors that have lenses in front of them to focus the light. But why is the lens necessary? In other words, why can’t you just point an image sensor without a lens at something you want to take a photo of? It is actually possible to take photos without lenses, though it’s quite challenging. But with some clever computational imaging tricks and the aid of diffusion models, lensless photography is becoming more tractable.

If you’ve ever used a camera with a detachable lens, you might have some idea of what an image looks like without a lens. In case you haven’t, here’s an image I found on Reddit that shows an example. The “preview” on the right shows what the camera “sees” without its lens: a blurry mess. In photography terms, the image isn’t in focus. Focus means that light from a single point in the scene goes to a single point on the image sensor; this is the critical role that lenses play. But in the real world, light bounces around in all directions. Without a lens, light from every part of the scene lands on every part of the sensor. That’s why the preview looks reddish: the scene is mostly reddish, and each pixel records something close to the scene’s average color. A lens fixes this by focussing only the light from one point in the scene onto one point on the sensor.

Lenses are a common way to focus light, but sometimes a lens doesn’t work. For example, have you ever thought about how an X-ray image is captured? X-rays have much higher energy than visible light, so they aren’t as easily deflected by glass lenses like you’d find in a camera. (You can read more about X-ray optics on Wikipedia — it’s fascinating!) So researchers are constantly looking for new ways to capture images without lenses. In 2015, a team discovered a way to capture images using a mask-sensor assembly, an image sensor that’s manufactured with a mask (which I’ll explain shortly) in front of the sensor that interferes with the incoming light. The diagram below shows how an image of a scene can be reconstructed from the blurry mess that the sensor captures.

In theory, it’s possible to model how the light in the scene interacts with the sensor, and to reconstruct an image using this model. This isn’t possible in practice though, since there are too many unknown parameters in the model. So the researchers had the clever idea of using a mask to interfere with the incoming light for particular rows and columns of the image. The pattern on the mask is actually very important: It’s the outer product of two 1D binary patterns, one for the rows and one for the columns. That’s why it looks like an irregular checkerboard. This property of the mask simplifies the computational reconstruction significantly, because the sensor measurement can be described by two small matrices (one acting on rows, one on columns) instead of one enormous one. I won’t get into the details, but with some linear algebra (read: basically magic) an image can be reconstructed from the sensor measurement.
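To make the "basically magic" a little less magical, here is a minimal NumPy sketch of the separable idea. It is a toy, not the researchers' calibrated system: the sizes, the random binary patterns, and the stand-in transfer matrices `phi_left` and `phi_right` are all assumptions for illustration, and the measurement is noise-free.

```python
import numpy as np

rng = np.random.default_rng(0)
n_scene, n_sensor = 16, 32  # hypothetical sizes, chosen only for the demo

# Two 1D binary patterns; their outer product is the 2D mask.
row_pattern = rng.integers(0, 2, size=n_sensor)
col_pattern = rng.integers(0, 2, size=n_sensor)
mask = np.outer(row_pattern, col_pattern)  # irregular checkerboard of 0s and 1s

# Assumption: because the mask is separable, the measurement can be modeled
# as Y = phi_left @ X @ phi_right.T. Real transfer matrices come from
# calibration; random binary matrices stand in for them here.
phi_left = rng.integers(0, 2, size=(n_sensor, n_scene)).astype(float)
phi_right = rng.integers(0, 2, size=(n_sensor, n_scene)).astype(float)

scene = rng.random((n_scene, n_scene))        # the image we want to recover
measurement = phi_left @ scene @ phi_right.T  # the "blurry mess" on the sensor

# Undo the row mixing and the column mixing with two pseudoinverses.
reconstruction = (
    np.linalg.pinv(phi_left) @ measurement @ np.linalg.pinv(phi_right).T
)

# Parameter counts: one dense matrix vs. two small separable ones.
dense_unknowns = (n_sensor ** 2) * (n_scene ** 2)
separable_unknowns = 2 * n_sensor * n_scene

print(np.allclose(reconstruction, scene))
print(dense_unknowns, separable_unknowns)
```

In this idealized setting the scene is recovered exactly. With real sensor noise, a plain pseudoinverse amplifies errors, so a regularized inversion would likely be needed; that part is left out of the sketch.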

While helpful from a computational standpoint, masking the incoming light isn’t good for capturing crisp images. But some clever researchers from Tel Aviv University had the idea of using AI to improve the image quality. The two main components of their method are a pre-trained latent diffusion model (in this instance, it’s Stable Diffusion), and a neural network called ControlNet. ControlNet guides the image-generating diffusion process for some specific task, like reconstructing images from masked, lensless photos. The control and diffusion processes can also be guided by a text prompt to further improve the reconstruction. The researchers call this process DifuzCam, and it’s shown below. (There is one more notable bit: the separable transform, which splits the single-channel input signal into four color channels.)
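As a rough illustration of that last parenthetical, the sketch below splits a single-channel measurement into four planes and applies a separable (left and right) linear map to each. Two things here are assumptions rather than details from the summary: that the four channels come from a 2x2 color mosaic on the sensor, and that each channel gets its own pair of matrices. The function names and the identity matrices used in the demo are hypothetical placeholders for learned weights.

```python
import numpy as np

def split_mosaic(raw):
    """Split a single-channel mosaic (H, W) into four (H/2, W/2) planes.

    Assumes a 2x2 color filter pattern, so each plane holds one position
    of the repeating 2x2 tile.
    """
    return np.stack([
        raw[0::2, 0::2], raw[0::2, 1::2],
        raw[1::2, 0::2], raw[1::2, 1::2],
    ])

def separable_transform(planes, left, right):
    """For each channel c, compute left[c] @ planes[c] @ right[c].T.

    planes: (C, H, W), left: (C, H_out, H), right: (C, W_out, W).
    """
    return np.einsum("cij,cjk,clk->cil", left, planes, right)

raw = np.arange(16, dtype=float).reshape(4, 4)  # tiny stand-in measurement
planes = split_mosaic(raw)

# Identity matrices stand in for the learned left/right weights.
left = np.stack([np.eye(2)] * 4)
right = np.stack([np.eye(2)] * 4)
separated = separable_transform(planes, left, right)

print(planes.shape, separated.shape)
```

With learned, non-square matrices, the same call would also change the spatial size of each plane, which is how a transform like this could map sensor measurements toward image space.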

There are two training signals that help DifuzCam learn how to reconstruct lensless photos. The first is a simple reconstruction loss (l_C), which encourages the generated image to look like the target image. The researchers call the other signal a separable reconstruction loss, which is just the L2 loss between the target image and the result of a learned 3x3 convolution on the color-separated input. The ControlNet’s parameters are trained via supervised learning using a dataset of paired lensless and regular photos.
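The separable reconstruction loss is simple enough to sketch in a few lines of NumPy. This is only an illustration under stated assumptions: the color-separated input is taken to have four channels at the same spatial size as a three-channel target, the L2 loss is computed as a mean over pixels, and the function names and the `weight` tensor are hypothetical. In training, `weight` would be learned alongside the ControlNet.

```python
import numpy as np

def conv3x3(x, weight):
    """Zero-padded 3x3 convolution (cross-correlation, as in deep learning).

    x: (C_in, H, W), weight: (C_out, C_in, 3, 3) -> (C_out, H, W).
    """
    _, h, w = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((weight.shape[0], h, w))
    for dy in range(3):
        for dx in range(3):
            patch = padded[:, dy:dy + h, dx:dx + w]  # shifted view of the input
            out += np.einsum("oc,chw->ohw", weight[:, :, dy, dx], patch)
    return out

def separable_reconstruction_loss(separated, target, weight):
    """Mean squared (L2) error between conv3x3(separated) and the target."""
    prediction = conv3x3(separated, weight)
    return float(np.mean((prediction - target) ** 2))

rng = np.random.default_rng(0)
separated = rng.random((4, 8, 8))  # stand-in color-separated input
target = separated[:3].copy()      # toy target: the first three channels

# A kernel that copies channel i of the input to channel i of the output.
weight = np.zeros((3, 4, 3, 3))
for i in range(3):
    weight[i, i, 1, 1] = 1.0

loss = separable_reconstruction_loss(separated, target, weight)
print(loss)
```

The toy kernel reproduces the target exactly, so the loss is zero; any other kernel or target gives a positive value that gradient descent could push down.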

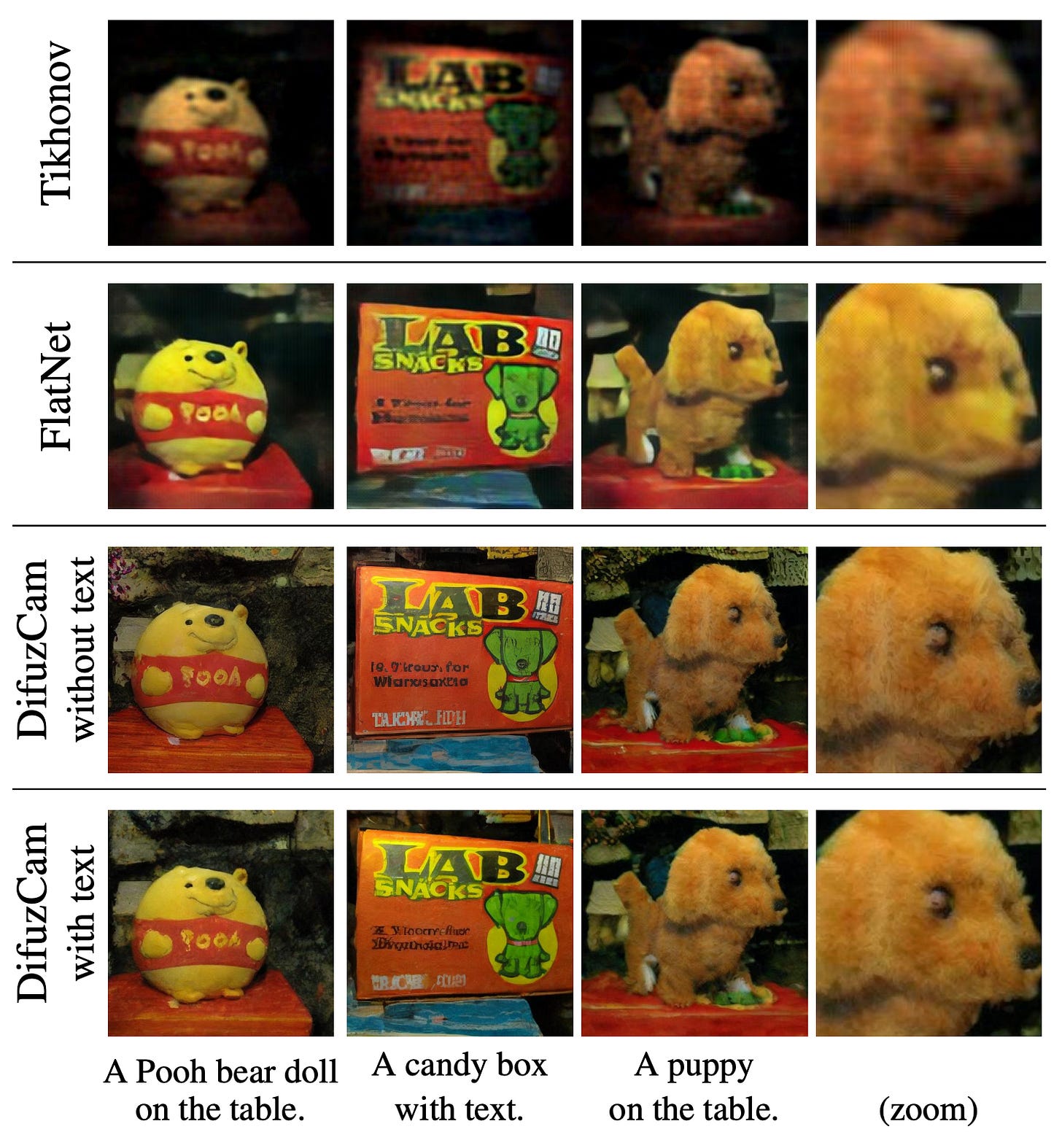

The figure below shows some of DifuzCam’s results, both with and without text guidance. It clearly generates crisper images than some of the other methods shown, and also has fewer artifacts when you zoom in. The results are a massive improvement over the simpler, original reconstruction method I described earlier. The DifuzCam process could pave the way for very thin cameras that could be used in phones or in other situations where a camera needs to be tiny. Of course, the tradeoff is that the images need to be processed by a hefty neural network. Still, I think these results are quite remarkable!