How LLMs redefine the workforce

Is your job obsolete, or just easier?

I have to be honest: amid the hoopla around LLMs, I’m not yet sure whether they’re overhyped, underhyped, or just hyped. I’m convinced they’re a big deal, but I’m not sure whether their true impact will arrive in one year or in twenty. As Niels Bohr noted, the future is a particularly tricky thing to predict.

At the end of March, four researchers (three of them right out of OpenAI) released a 35-page research paper studying the impact of GPT models on the U.S. labor market. The title begins “GPTs are GPTs…”, where the first “GPTs” means AIs (as in Generative Pre-trained Transformer models) and the second means General-Purpose Technologies: technologies that affect an entire economy, like electricity.

The upshot is that a veritable smorgasbord of jobs will be, in the terminology of the paper, “exposed” to new AI capabilities. I’d personally phrase this as saying that an AI can help you with your job. Whether that’s good or bad for you is hard to tell from the paper. For example, if the only thing you do is type out insanely predictable text, you might be in trouble. On the other hand, if 5% of your time involves reading boring text that you’d love summarized, your job is probably safe and about to get easier.

The paper makes things a lot more interesting by distinguishing between direct LLM usage and indirect (which it calls LLM+ exposure), with “indirect” meaning that your job is easier not because you’re using an LLM, but because you’re using something built on top of an LLM. In general, the authors have examined large-scale job impact from a variety of fascinating perspectives.

— Tyler & Team

Paper: GPTs are GPTs: An Early Look at the Labor Market Impact Potential of Large Language Models

Summary by Adrian Wilkins-Caruana

To what extent will AI systems such as GPT impact our work routines? Can they be considered general-purpose technologies like electricity, computers, or the internet? According to Eloundou et al., 15% of day-to-day worker tasks can be completed significantly faster and at the same level of quality using GPT-3, and presumably even more quickly with GPT's newer variants. This figure jumps to 50% when you include software and tooling built on top of these large language models (LLMs). Due to their versatile task-level abilities, LLMs hold the potential to influence many jobs in the U.S. economy, much like other general-purpose technologies have.

To measure the effect that LLMs will have, the authors used the O*NET database, which contains information on ~1k occupations and ~19k tasks, like this one for a computer systems engineer: “Monitor system operation to detect potential problems.” The authors manually annotated each task using a novel rubric to estimate its level of exposure to LLM-based automation. “Exposure” means that an LLM can significantly reduce the time it takes to complete a task at the same level of quality as a human. The rubric has three levels: no exposure, direct exposure (LLMs can automate this task), and LLM+ exposure (LLMs can perform this task if supplemented with additional software).

Using this rubric, the authors and GPT-4 each annotated how exposed they predict each task is to GPT-3 automation. The two sets of annotations were similar but, interestingly, the human annotators were more likely than GPT-4 to rate some jobs as highly exposed. The researchers then aggregated these task-level labels to the occupation level to measure each occupation’s exposure.
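To make the aggregation step concrete, here is a minimal sketch of how task-level labels could be rolled up into an occupation-level score. It assumes the simplest possible rule, an unweighted share of tasks per occupation, which may differ from the weighting the paper actually uses. The label strings, the function name `occupation_exposure`, and the sample annotations are all hypothetical.

```python
from collections import defaultdict

# Hypothetical label names mirroring the three rubric levels.
NONE, DIRECT, LLM_PLUS = "none", "direct", "llm_plus"


def occupation_exposure(task_labels):
    """Roll task-level labels up to occupation-level exposure shares.

    task_labels: iterable of (occupation, label) pairs, one per task.
    Returns {occupation: (direct_share, direct_or_llm_plus_share)}.
    Assumption: every task counts equally (no importance weighting).
    """
    counts = defaultdict(lambda: {NONE: 0, DIRECT: 0, LLM_PLUS: 0})
    for occupation, label in task_labels:
        counts[occupation][label] += 1

    shares = {}
    for occupation, c in counts.items():
        total = sum(c.values())
        direct = c[DIRECT] / total
        direct_or_plus = (c[DIRECT] + c[LLM_PLUS]) / total
        shares[occupation] = (direct, direct_or_plus)
    return shares


# Made-up annotations for illustration only; not data from the paper.
example = [
    ("occupation_a", DIRECT),
    ("occupation_a", DIRECT),
    ("occupation_a", LLM_PLUS),
    ("occupation_a", NONE),
    ("occupation_b", NONE),
    ("occupation_b", NONE),
]

for name, (direct, with_tools) in occupation_exposure(example).items():
    print(f"{name}: direct={direct:.0%}, direct or LLM+={with_tools:.0%}")
```

Reporting two numbers per occupation mirrors the distinction between direct and LLM+ exposure: the second share can only be equal to or larger than the first.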

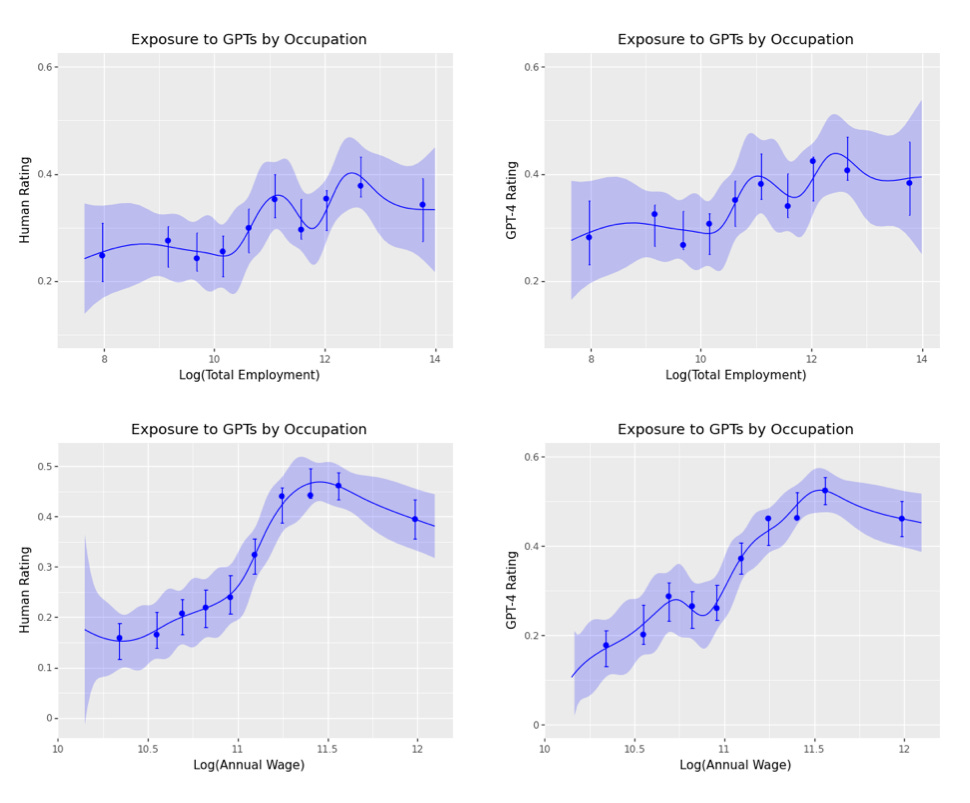

This figure shows various occupations’ exposure to LLMs as assessed by people (left) and GPT-4 (right). The y-axis is the percentage of tasks in an occupation that are LLM exposed. In the top row, the x-axis represents uncommon vs. common jobs, such as rockstars (lower total employment) and nurses (higher total employment). In the bottom row, the x-axis represents the occupations’ log of annual income. Overall, it shows that more common jobs are slightly more exposed, and that exposure increases with wages.

The next figure is a bit hard to decipher, but it summarizes the exposure across the economy: The lower and farther to the left the curve is, the less exposed the economy is. The green-circled data point indicates that, according to annotations made by people, at least 60% of tasks in 50% of jobs are exposed to LLM+ automation. The red / blue circles indicate LLM exposure, and the red / blue triangles indicate LLM+ exposure. The blue curves show exposure as judged by people, and red curves show exposure as judged by GPT-4. The key takeaway is that the economy is already moderately exposed to LLM automation, and that exposure significantly increases with LLM+ automation!
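One way to read a point such as the green-circled one is as the answer to the question: "what fraction of occupations have at least X% of their tasks exposed?" The sketch below computes that fraction for a list of occupation-level exposure shares. It assumes every occupation counts equally (no weighting by employment), and the function name and sample numbers are hypothetical, not taken from the paper.

```python
def share_of_occupations_at_least(exposures, threshold):
    """Fraction of occupations whose exposed-task share is >= threshold.

    exposures: list of per-occupation exposure shares in [0, 1].
    Assumption: occupations are unweighted (not scaled by employment).
    """
    if not exposures:
        raise ValueError("exposures must not be empty")
    hits = sum(1 for e in exposures if e >= threshold)
    return hits / len(exposures)


# Made-up exposure shares for four occupations; illustration only.
sample = [0.2, 0.5, 0.6, 0.8]

# Sweeping the threshold traces out a curve like the ones described above.
for pct in range(0, 101, 20):
    share = share_of_occupations_at_least(sample, pct / 100)
    print(f"at least {pct}% of tasks exposed: {share:.0%} of occupations")
```

With these sample numbers, half of the occupations have at least 60% of their tasks exposed, which is the same shape of statement as the one made about the green-circled point.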

Eloundou et al.’s findings indicate that a significant proportion of the U.S. economy is already exposed to automation from GPT-3 or other software that might use GPT-3, and newer models will increase this exposure. If GPTs are truly general-purpose technologies, then the workforce will have to adapt to new ways of working. We’ve done it before with the advent of electricity, computers, and the internet – can we do it again?