Fonts that tell a story

A fontastic new AI framework designs letters to fit any theme.

Years ago, I attended a talk by Douglas Hofstadter about automatically generated fonts. I was impressed that he’d been able to execute on the project at all, although the results at the time were underwhelming. It was obvious that human-designed fonts were far superior on all counts: legibility, thematic execution, and elegance. Below is a selection of generated fonts from Hofstadter’s book, Fluid Concepts and Creative Analogies:

This week’s paper is, in my opinion, several levels beyond this earlier style of generation. The paper’s framework produces custom lettering that is both easy to read and clearly based on whatever theme you’ve thrown at it. Check out the sample images below. That’s a lot of progress!

— Tyler & Team

Paper: Word-As-Image for Semantic Typography

Summary by Adrian Wilkins-Caruana

A “word-as-image” is an illustration where a word’s meaning is represented using graphical elements of its letters. Creating these illustrations is challenging, since it requires the ability to understand the semantic meaning behind the word and to convey it clearly without affecting legibility. Iluz et al. show how large language-vision models can generate word-as-image illustrations automatically. Here are some examples:

The method, which is applied independently to each letter in the word, works by changing the geometry of the letter to reflect the meaning of the word while still preserving the letter’s original shape and style. For example, if the letter is “S” and the context is “surfing,” then their method will iteratively adjust the shape of the letter “S” until the modified shape scores well across three different objectives (more on these in a sec).

Here are more examples. Notice how the modified letters match the word’s font, and how only some of the word’s letters were modified:

The first objective uses Stable Diffusion (SD) to score how closely the modified letter resembles the word’s concept, using a process called Score Distillation Sampling (SDS). SDS is a way to optimize a set of input parameters, here the ones that define the shape and geometry of a letter, using feedback from a pretrained diffusion model. At each optimization step, the letter’s geometry is changed slightly. Then, some noise is added to an image of the new letter, and SD tries to predict that noise, guided by a text prompt that describes what the image underneath the noise should show, such as “surfing minimal flat 2d vector.” If SD predicts the noise well, that means the modified letter “S” now looks like “surfing”!
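To make the SDS loop a bit more concrete, here is a minimal toy sketch in plain Python. It is not the paper's implementation: `toy_noise_predictor` is a hypothetical stand-in for Stable Diffusion that "believes" every clean image equals a fixed `concept` vector, the letter is just a short list of numbers rather than a rendered vector outline, and the usual timestep weighting is left out. What it does show is the shape of the update: add noise, ask the model to predict it, and nudge the parameters by the difference between predicted and actual noise.

```python
import math
import random

def toy_noise_predictor(noisy, alpha, sigma, concept):
    # Hypothetical stand-in for Stable Diffusion: it assumes the clean image
    # is exactly `concept`, so its best guess for the noise is whatever is
    # left over after removing the scaled concept from the noisy input.
    return [(n - alpha * c) / sigma for n, c in zip(noisy, concept)]

def sds_step(params, concept, lr=0.1, rng=random):
    # Pick a noise level, then noise the "image". In this toy the image is
    # the parameter list itself, so rendering is the identity function.
    t = rng.uniform(0.2, 0.8)
    alpha, sigma = math.sqrt(1.0 - t), math.sqrt(t)
    noise = [rng.gauss(0.0, 1.0) for _ in params]
    noisy = [alpha * p + sigma * e for p, e in zip(params, noise)]

    predicted = toy_noise_predictor(noisy, alpha, sigma, concept)

    # SDS-style gradient: predicted noise minus the noise actually added.
    grad = [pe - e for pe, e in zip(predicted, noise)]
    return [p - lr * g for p, g in zip(params, grad)]

def optimize(params, concept, steps=200, seed=0):
    # Repeat the noisy update; a fixed seed keeps the run reproducible.
    rng = random.Random(seed)
    for _ in range(steps):
        params = sds_step(params, concept, rng=rng)
    return params
```

Calling `optimize([0.0, 1.0, 0.5, 0.2], [1.0, 0.0, 0.25, 0.9])` pulls the starting values toward the concept vector. In the real method the gradient would also flow back through a differentiable renderer to the letter's shape parameters, and the noise predictor would be conditioned on the text prompt.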

The other two objectives both aim to keep the modified letter close to the overall shape of the original letter. One objective compares blurred versions of the two letters, which allows fine details in the modified shape to change while the overall shape remains the same. The other objective compares the geometry of the shapes, so that the structure and angles of the shape don’t change too much. These two pictures show how blurred versions of the letters are compared (top) and how the letters’ geometries are compared (bottom).

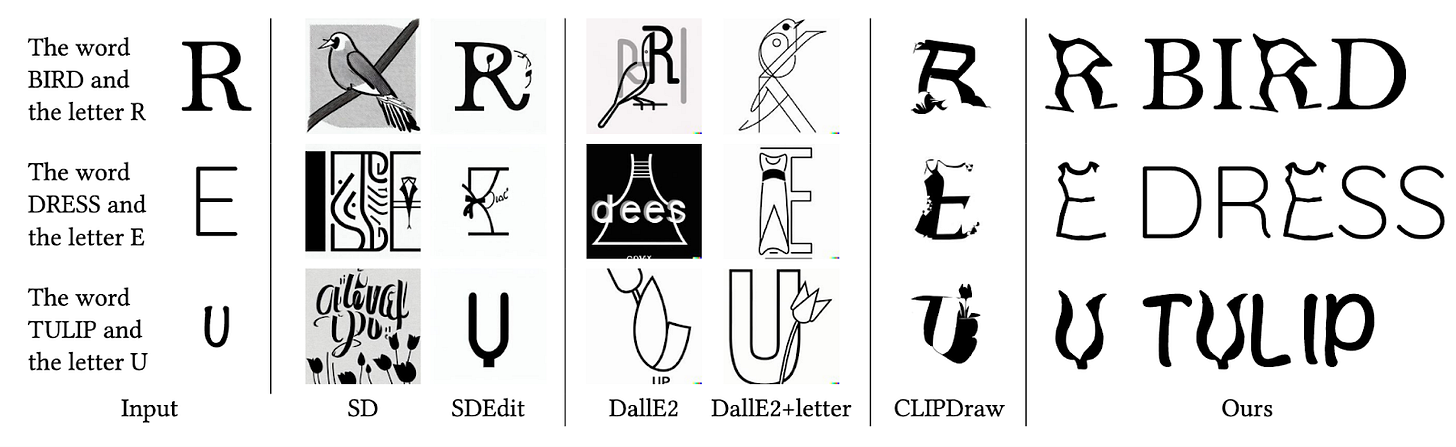
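The two shape-preserving objectives can be sketched in a few lines of plain Python. This is a simplified illustration under loud assumptions, not the paper's code: letters are tiny grayscale grids (lists of lists) for the blur comparison and simple polygons for the geometry comparison, a 3x3 box blur stands in for whatever low-pass filter the authors use, and the function names `blurred_shape_loss` and `angle_loss` are made up for this example.

```python
import math

def box_blur(img):
    # 3x3 box blur on a grid given as a list of rows; edge pixels average
    # over whichever neighbors actually exist.
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [img[j][i]
                      for j in range(max(0, y - 1), min(h, y + 2))
                      for i in range(max(0, x - 1), min(w, x + 2))]
            row.append(sum(window) / len(window))
        out.append(row)
    return out

def blurred_shape_loss(original, modified):
    # Mean squared difference between the blurred letters: small local
    # changes mostly wash out, large shifts in overall shape do not.
    a, b = box_blur(original), box_blur(modified)
    diffs = [(p - q) ** 2 for ra, rb in zip(a, b) for p, q in zip(ra, rb)]
    return sum(diffs) / len(diffs)

def corner_angles(points):
    # Angle (in radians, between 0 and pi) at each vertex of a closed polygon.
    n = len(points)
    angles = []
    for k in range(n):
        px, py = points[k - 1]
        cx, cy = points[k]
        nx, ny = points[(k + 1) % n]
        ux, uy = px - cx, py - cy
        vx, vy = nx - cx, ny - cy
        angles.append(abs(math.atan2(ux * vy - uy * vx, ux * vx + uy * vy)))
    return angles

def angle_loss(original_pts, modified_pts):
    # Mean squared change in corner angles; uniform scaling or shifting the
    # shape costs nothing, while shearing or bending it does.
    pairs = zip(corner_angles(original_pts), corner_angles(modified_pts))
    return sum((a - b) ** 2 for a, b in pairs) / len(original_pts)
```

For instance, comparing a unit square with a scaled and shifted copy gives an `angle_loss` of essentially zero, while comparing it with a sheared version gives a clearly positive value. In a full pipeline these two terms would be added, with some weighting, to the SDS objective.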

Other methods can generate joint image-letter graphics, but in the paper’s comparisons this method does the best job of generating letters that resemble both the word’s concept and the letter’s original shape and style.

If you get a kick out of the combination of fonts and algorithms (or math!), you’ll probably also enjoy this classic 2014 paper by Demaine & Demaine (“Fun with Fonts: Algorithmic Typography”). Here’s a snippet to give you a sense of the kind of shenanigans going on over there: