Can a fine-tuned LLM create good music?

[Paper: ChatMusician: Understanding and Generating Music Intrinsically with LLM]

Summary by Adrian Wilkins-Caruana

If you’re a regular ChatGPT user, you’ve probably noticed that, capable as ChatGPT may be, it struggles with some tasks. For example, if you asked it to compose a rap song, it might spit out some impressive rhymes in a typical verse-chorus structure, but those are lyrics, not an actual song. Wouldn’t it be cool if LLMs like ChatGPT could generate songs in a more complete sense, with information about tempo, chords, melodies, structure, and motifs? In this week’s paper, the open-source research community Multimodal Art Projection (M-A-P) introduces ChatMusician, a model that can do just that.

When I think of audio-based neural networks (NNs), I immediately think of bespoke NN architectures tailored to the specific challenges of audio signals. But ChatMusician doesn’t use a bespoke architecture; it’s just a fine-tuned version of Llama 2. Despite this standard design, ChatMusician learns about music through ABC notation, a text-based shorthand for writing down a piece of music, which lets an ordinary LLM read and write music as plain text. The figure below (from Wikipedia) shows ABC notation and the corresponding staff notation for the same song.
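To make “text-based” concrete, here is a small hypothetical tune in ABC notation (it is not the one in the Wikipedia figure or in the paper), plus a minimal Python sketch that splits it into header fields and music. It assumes the common convention that each header line is a single letter, a colon, and a value (X: index, T: title, M: meter, L: default note length, K: key), with K: closing the header. Real ABC files have many more features than this toy parser handles.

```python
# A hypothetical tune in ABC notation (illustrative only, not from the paper).
TUNE = """X:1
T:Example Tune
M:4/4
L:1/8
K:G
|:GABc dedB|dedB dedB|c2ec B2dB|c2A2 A2BA:|
"""


def parse_abc(text: str) -> tuple[dict[str, str], str]:
    """Split an ABC tune into its header fields and its music body.

    Minimal sketch: header lines look like "T:Title"; by convention the
    K: (key) field is the last header line, and everything after it is music.
    """
    header: dict[str, str] = {}
    body_lines: list[str] = []
    in_body = False
    for line in text.strip().splitlines():
        is_field = len(line) > 1 and line[0].isalpha() and line[1] == ":"
        if not in_body and is_field:
            field, value = line[0], line[2:].strip()
            header[field] = value
            if field == "K":
                in_body = True  # the key field ends the header
        else:
            in_body = True
            body_lines.append(line)
    return header, "\n".join(body_lines)


header, body = parse_abc(TUNE)
print(header["T"], header["M"], header["K"])  # Example Tune 4/4 G
print(body)  # |:GABc dedB|dedB dedB|c2ec B2dB|c2A2 A2BA:|
```

Each bar between the “|” symbols holds eight eighth notes (L:1/8 in 4/4 time), and a digit after a note such as “c2” doubles its length. Because all of this is ordinary text, an LLM can tokenize and generate it without any audio-specific machinery.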

To teach ChatMusician about music, the M-A-P researchers curated their own dataset to continue training Llama 2. They included the following categories of text in their training corpus:

General music corpora, which are text documents containing music terminology.

Instruction and chat data to help Llama 2 learn how to chat and answer questions.

Music knowledge and music summaries: summaries of the metadata of 2 million songs from YouTube, plus music-knowledge question-answer (QA) pairs that LLMs generated from those summaries.

Math and code data, which the researchers think will aid ChatMusician with symbolic reasoning about music scores.

The researchers also curated a music theory benchmark called MusicTheoryBench. To build it, they hired a college-level music teacher to write 372 multiple-choice questions (each with 4 choices) about music knowledge and reasoning. (If a question included music notation, the authors converted it into ABC notation.) The figure below shows examples of these knowledge (top) and reasoning (bottom) questions.

The researchers used these questions to compare the musical understanding of several ChatMusician variants with that of GPT3.5, GPT4, and Llama 2. I see two main takeaways from their results, which you can see below. First, GPT4 knows a lot about music, but ChatMusician’s music-specific training makes it more effective at musical reasoning than Llama 2. Second, music reasoning is hard: on the reasoning questions, all the models score about as well as random guessing (25% with four choices), though the ChatMusician models do marginally better than that.

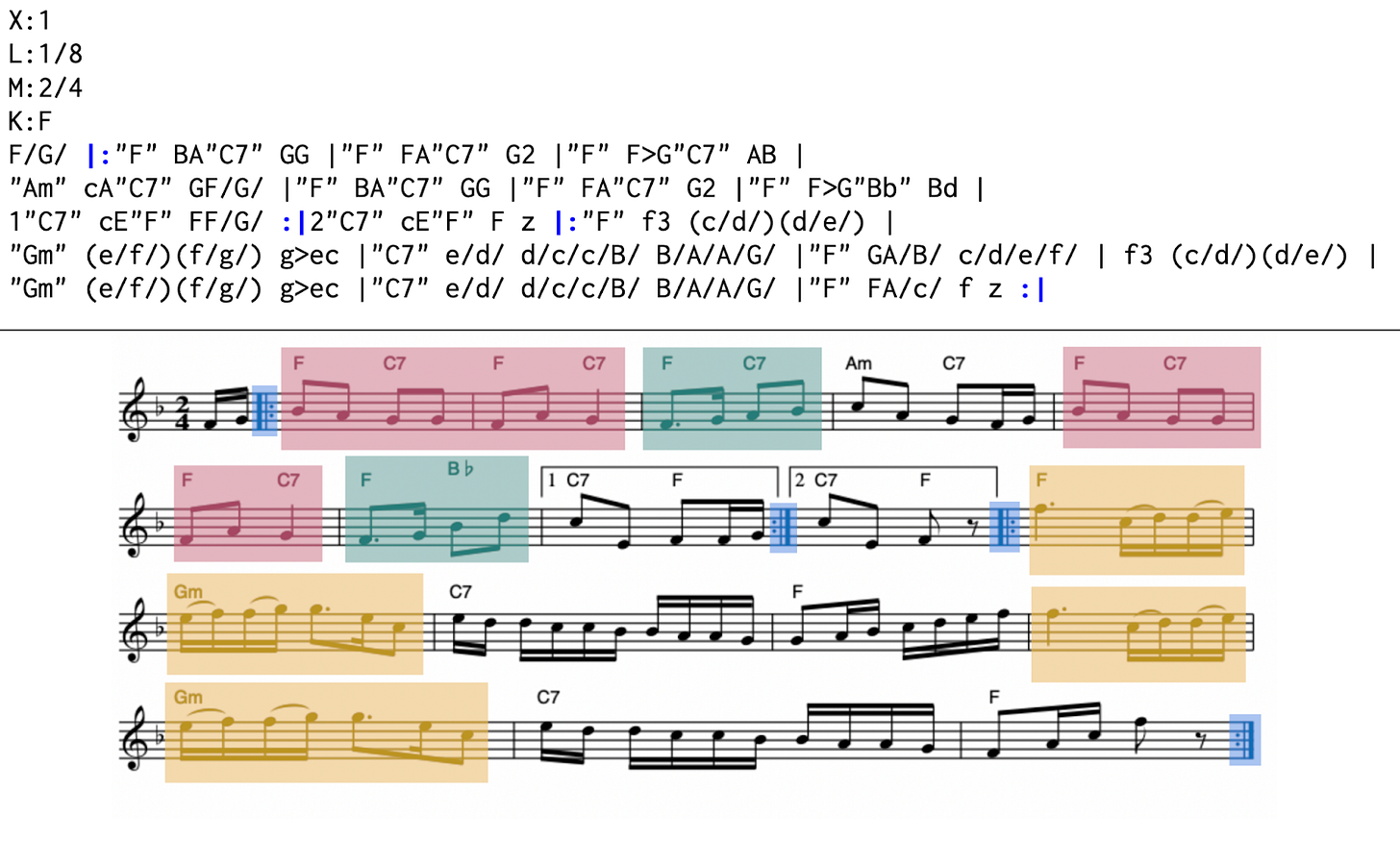
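With four options per question, random guessing is right 25% of the time, so that is the floor every model should be compared against. The Python sketch below is illustrative only and is not the authors’ evaluation code: it scores predictions against an answer key and computes the random-guess baseline together with a binomial standard error, which gives a rough sense of how far above 25% a score must be before it clearly stands out from chance. The function names are hypothetical. The example uses all 372 questions; the reasoning questions are only a subset, so their error band would be wider.

```python
import math


def accuracy(predictions: list[str], answers: list[str]) -> float:
    """Fraction of multiple-choice questions where the predicted option is correct."""
    if len(predictions) != len(answers):
        raise ValueError("predictions and answers must have the same length")
    correct = sum(p == a for p, a in zip(predictions, answers))
    return correct / len(answers)


def random_baseline(n_questions: int, n_choices: int = 4) -> tuple[float, float]:
    """Expected accuracy of uniform random guessing and its standard error.

    Each guess is correct with probability p = 1 / n_choices, so accuracy over
    n_questions independent guesses has mean p and standard error
    sqrt(p * (1 - p) / n_questions) (a binomial proportion).
    """
    p = 1 / n_choices
    return p, math.sqrt(p * (1 - p) / n_questions)


mean, se = random_baseline(372)  # 372 = size of the full benchmark
print(mean, round(se, 4))  # 0.25 0.0225
print(accuracy(["A", "C", "B", "D"], ["A", "B", "B", "D"]))  # 0.75
```

Under these assumptions, a score within a few percentage points of 25% on the full set is hard to distinguish from guessing, which is consistent with reading the models’ reasoning scores as “about as well as random.”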

Beyond quantitative results, the researchers also demonstrate how ChatMusician can generate music. The figure below shows the ABC notation (top) and the corresponding staff notation (bottom) of a song created by ChatMusician. We can see some of the key features of ABC notation, such as the “|:” and “:|” repetition symbols (blue), as well as repetition and motifs in the colored sections. (Red blocks are one motif, yellow blocks are another, and green blocks represent a variation on the preceding motif. I think these colors represent a human’s analysis of the model-created song.)
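The “|:” and “:|” symbols mark a section that is played twice. As a minimal Python sketch (a hypothetical helper, not part of ChatMusician), the function below unrolls such repeats in an ABC music body and returns the bars in playing order. It assumes simple, non-nested repeats with no first or second endings (“[1”, “[2”) and no “::” double repeats, all of which real ABC tunes can contain.

```python
import re

# Hypothetical ABC body: four bars inside a repeat, then one more bar.
BODY = "|:GABc dedB|dedB dedB|c2ec B2dB|c2A2 A2BA:|G2Bd g2fg|"


def expand_repeats(body: str) -> list[str]:
    """Unroll simple |: ... :| repeats and return the bars in playing order.

    Handles only non-nested repeats without first/second endings.
    """

    def unroll(match: re.Match) -> str:
        section = match.group(1)  # the bars between |: and :|
        return f"|{section}|{section}|"

    # Non-greedy match so each |: pairs with the nearest following :|
    flat = re.sub(r"\|:(.*?):\|", unroll, body)
    return [bar.strip() for bar in flat.split("|") if bar.strip()]


bars = expand_repeats(BODY)
print(len(bars))  # 9
print(bars[0] == bars[4])  # True
```

Five bars are written but nine are played, which is one reason repetition markers make ABC notation compact and why repeated motifs are easy to spot in the text.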

The researchers conducted several other experiments: using ChatMusician to compare the compression ratio of ABC notation with that of other music data formats (e.g., MIDI and WAV), exploring few-shot learning with GPT4 on music knowledge and reasoning, and a listening study in which participants were asked whether they preferred music generated by GPT4, music generated by ChatMusician, or an actual song. In that last experiment, when each model’s output was compared against the actual song, people preferred ChatMusician’s music 76% of the time, versus 44% for GPT4’s.
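As a rough illustration of why a symbolic text format is so compact (this is not the paper’s measurement or its definition of compression ratio), the Python sketch below compares the size of a short hypothetical ABC tune with the size of uncompressed CD-quality audio of the same duration. It assumes 44.1 kHz, 16-bit stereo PCM (the typical payload of a WAV file) and a tempo of 120 quarter notes per minute, set with the ABC Q: field. MIDI is left out because its size depends on how the events are encoded.

```python
# Hypothetical ABC tune (illustrative only, not from the paper).
ABC_TUNE = """X:1
T:Example Tune
M:4/4
L:1/8
Q:1/4=120
K:G
|:GABc dedB|dedB dedB|c2ec B2dB|c2A2 A2BA:|
"""


def pcm_bytes(seconds: float, sample_rate: int = 44_100,
              channels: int = 2, bytes_per_sample: int = 2) -> int:
    """Size in bytes of uncompressed PCM audio of the given duration."""
    return int(seconds * sample_rate * channels * bytes_per_sample)


# The repeat means the 4 written bars are played twice: 8 bars of 4 beats.
# At 120 beats per minute each beat lasts 0.5 s, so 8 * 4 * 0.5 = 16 s.
duration_s = 8 * 4 * 60 / 120
abc_size = len(ABC_TUNE.encode("utf-8"))
wav_size = pcm_bytes(duration_s)
print(duration_s, abc_size, wav_size)
print(f"PCM/ABC size ratio: {wav_size / abc_size:.0f}x")
```

Under these assumptions, the raw audio is tens of thousands of times larger than the ABC text, which is part of why a text-only LLM can handle full pieces in ABC notation but not as audio.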

Impressive as these results are, I think they represent just the tip of the iceberg for musical LLMs. The approach the researchers used to craft MusicTheoryBench could be extended to create more training data and an even better musical LLM. These models could open up a new kind of laboratory for musical experimentation. Perhaps one day musicians, chatting with future music-generation models, will have powerful new tools at their disposal.