A leap forward for text-to-3d generation

Paper: DreamCraft3D: Hierarchical 3D Generation with Bootstrapped Diffusion Prior

Summary by Adrian Wilkins-Caruana and Tyler Neylon

Have you ever thought about how an animation studio like Pixar turns an idea for a film into a movie like Toy Story? For example, how did they go from the idea of the character “Woody” into Woody as he appears in the film? What should his hat look like? How big should his grin be? What shape should his head be?

The Woody that we’re all familiar with is the result of many designers — 3d modelers, animators, writers, and artists who were each responsible for aspects of his appearance. That let each team focus on their particular specialty. Today’s paper presents DreamCraft3D, a method that follows this same idea: Use several specialized machine learning models and compose them to generate high-fidelity 3d content from an idea captured by text. For example, here’s a cyberpunk cat generated by DreamCraft3D from a text prompt.

The authors of this paper drew inspiration from a manual artistic process for creating such 3d models. First, an artist draws the idea as a rough 2d sketch. Then they sculpt a rough 3d geometry from the sketch. Finally, they refine the geometry's shape and texture to produce a high-fidelity model. DreamCraft3D follows the same process. First, a pre-existing text-to-image model produces an image of the idea. Then, the geometry sculpting stage builds a rough 3d model from the generated image. Finally, the texture boosting stage refines the rough geometry and generates high-fidelity textures (images that are painted onto the 3d surfaces). The geometry sculpting and texture boosting stages are the key contributions of this paper.
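The three-stage flow can be pictured as a simple composition of functions, where each stage consumes the previous stage's output. This is only a structural sketch: the names `text_to_image`, `sculpt_geometry`, and `boost_texture` are hypothetical, and each stage is a placeholder that records what it would produce rather than doing any real modeling.

```python
from dataclasses import dataclass, field


@dataclass
class Asset:
    """Hypothetical container for the intermediate results of each stage."""
    prompt: str
    reference_image: str = ""
    geometry: str = ""
    texture: str = ""
    history: list = field(default_factory=list)


def text_to_image(asset: Asset) -> Asset:
    # Stage 0: a pre-existing text-to-image model draws the "2d sketch".
    asset.reference_image = f"image of '{asset.prompt}'"
    asset.history.append("text_to_image")
    return asset


def sculpt_geometry(asset: Asset) -> Asset:
    # Stage 1 (placeholder): lift the reference image into a rough 3d shape.
    asset.geometry = f"rough mesh from {asset.reference_image}"
    asset.history.append("sculpt_geometry")
    return asset


def boost_texture(asset: Asset) -> Asset:
    # Stage 2 (placeholder): refine the shape and paint detailed textures onto it.
    asset.texture = f"detailed texture for {asset.geometry}"
    asset.history.append("boost_texture")
    return asset


def generate(prompt: str) -> Asset:
    """Run the stages in order, each one consuming the previous stage's output."""
    asset = Asset(prompt=prompt)
    for stage in (text_to_image, sculpt_geometry, boost_texture):
        asset = stage(asset)
    return asset


result = generate("a cyberpunk cat")
print(result.history)  # ['text_to_image', 'sculpt_geometry', 'boost_texture']
```

Keeping each stage as a separate function mirrors the "specialized teams" idea: any one stage could be swapped for a better model without touching the others.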

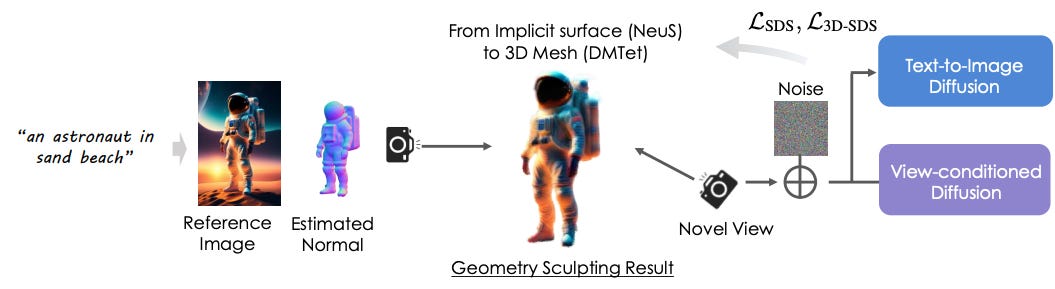

The geometry sculpting stage turns the generated image into a rough 3d model. It optimizes an implicit, neural representation of the scene depicted in the image, using several loss functions that measure how well the network reconstructs the image's color, depth, and geometry.
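To make "several loss functions" concrete, here is a minimal sketch of a combined reference-view loss over flattened, single-channel images. The specific terms and weights are assumptions for illustration, not the paper's exact formulation: a color error restricted to the object's mask, a silhouette (mask) error, and a negative-Pearson depth term. That last choice assumes the target depth comes from a monocular depth estimator, which is typically only reliable up to scale and shift, so the loss rewards depths that agree up to a linear map.

```python
import math


def masked_mse(pred, target, mask):
    """Mean squared error over the pixels where mask == 1 (the foreground object)."""
    total = sum(m * (p - t) ** 2 for p, t, m in zip(pred, target, mask))
    count = sum(mask)
    return total / count if count else 0.0


def mse(pred, target):
    """Plain mean squared error, used here for the silhouette (mask) term."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


def negative_pearson(pred_depth, est_depth):
    """Scale- and shift-invariant depth loss: -1 when depths agree up to a linear map."""
    n = len(pred_depth)
    mp = sum(pred_depth) / n
    me = sum(est_depth) / n
    cov = sum((p - mp) * (e - me) for p, e in zip(pred_depth, est_depth))
    sp = math.sqrt(sum((p - mp) ** 2 for p in pred_depth))
    se = math.sqrt(sum((e - me) ** 2 for e in est_depth))
    if sp == 0 or se == 0:
        return 0.0  # constant depth carries no correlation signal
    return -cov / (sp * se)


def reference_view_loss(render, reference, weights):
    """Weighted sum of per-term losses comparing a rendering to the reference image.

    For simplicity, depth is compared over all pixels, not just the foreground.
    """
    return (
        weights["rgb"] * masked_mse(render["rgb"], reference["rgb"], reference["mask"])
        + weights["mask"] * mse(render["mask"], reference["mask"])
        + weights["depth"] * negative_pearson(render["depth"], reference["depth"])
    )


reference = {"rgb": [0.2, 0.8, 0.5, 0.0], "mask": [1, 1, 1, 0], "depth": [1.0, 2.0, 3.0, 9.0]}
render = {"rgb": [0.2, 0.8, 0.5, 0.7], "mask": [1, 1, 1, 0], "depth": [3.0, 5.0, 7.0, 19.0]}
weights = {"rgb": 1.0, "mask": 0.5, "depth": 0.1}  # made-up weights
loss = reference_view_loss(render, reference, weights)
print(loss)  # about -0.1: colors match inside the mask, depth = 2 * reference + 1
```

The background pixel's color differs, but it is ignored because it lies outside the mask, which is why only the depth term contributes here.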

At this point, the authors do something clever: they guide the 3d model with an additional loss so that it both matches their 2d image and looks good and matches the text from new viewpoints. This loss is based on a technique called score distillation sampling (SDS): the 3d model is rendered from multiple camera angles, and a pre-existing diffusion model judges how well each rendered 2d image aligns with the input text. The authors also convert their model from an implicit representation to a 3d mesh using a technique called DMTet (Deep Marching Tetrahedra), so that their final output is a traditional object mesh. Together, these steps make up what they call the geometry sculpting stage, which results in a first draft of a 3d object, as shown here.
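In its standard form (introduced in DreamFusion), SDS updates the 3d model's parameters $\theta$ with the gradient $\nabla_\theta \mathcal{L}_{\text{SDS}} = \mathbb{E}_{t,\epsilon}\big[w(t)\,(\hat\epsilon_\phi(x_t; y, t) - \epsilon)\,\partial x / \partial \theta\big]$, where $x$ is a rendering of the model, $x_t = \alpha_t x + \sigma_t \epsilon$ is that rendering with noise added at timestep $t$, $y$ is the text prompt, and $\hat\epsilon_\phi$ is the diffusion model's noise prediction. The toy below is a minimal sketch under heavy assumptions: the "image" is three numbers, the renderer is the identity, the noise schedule and weighting $w(t) = \sigma_t^2$ are illustrative choices, and the diffusion model is replaced by a hypothetical `toy_noise_predictor` that believes exactly one image matches the prompt. It still shows the core mechanism: the gap between predicted and true noise points toward "more on-prompt", and that gap is used directly as the gradient.

```python
import math
import random


def toy_noise_predictor(x_t, alpha, sigma, target):
    """Hypothetical stand-in for a pretrained diffusion model.

    If the only image that matches the prompt is `target`, the ideal
    noise prediction is exactly (x_t - alpha * target) / sigma.
    """
    return [(xi - alpha * mi) / sigma for xi, mi in zip(x_t, target)]


def sds_step(theta, target, lr, rng):
    """One score-distillation update with an identity renderer (x = theta)."""
    t = rng.uniform(0.02, 0.98)  # random diffusion timestep
    alpha, sigma = math.cos(math.pi * t / 2), math.sin(math.pi * t / 2)
    eps = [rng.gauss(0.0, 1.0) for _ in theta]  # true noise
    x = theta  # "render" the 3d model; dx/dtheta is the identity
    x_t = [alpha * xi + sigma * ei for xi, ei in zip(x, eps)]
    eps_hat = toy_noise_predictor(x_t, alpha, sigma, target)
    w = sigma ** 2  # one common timestep weighting
    # SDS uses (eps_hat - eps) directly, skipping the diffusion model's Jacobian.
    grad = [w * (eh - e) for eh, e in zip(eps_hat, eps)]
    return [th - lr * g for th, g in zip(theta, grad)]


rng = random.Random(0)
target = [0.9, 0.1, 0.5]  # what the toy "diffusion model" considers on-prompt
theta = [0.0, 0.0, 0.0]   # parameters of the toy 3d model
for _ in range(2000):
    theta = sds_step(theta, target, lr=0.1, rng=rng)
print([round(v, 3) for v in theta])  # close to [0.9, 0.1, 0.5]
```

Because the toy predictor's error is exactly proportional to `theta - target`, the random noise cancels and the parameters converge to the target. With a real diffusion model the update is noisy, and in the geometry sculpting stage it is combined with the reference-view losses and renderings from many camera angles.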

The geometry sculpting stage is good at making a high-quality 3d shape, but the textures (the images painted onto the 3d surfaces) remain quite blurry because, for efficiency, the 2d renderings used in the previous stage's SDS loss computation are only small 64x64-pixel images. So the researchers add another stage to improve the 3d mesh and optimize its textures. They use DreamBooth, a technique for fine-tuning a text-to-image diffusion model on a few images of a specific subject, with a few rendered views of the geometry-sculpted subject and a text prompt, so that the fine-tuned model can generate higher-fidelity views of that subject. This fine-tuned model then guides the SDS process, providing an even better loss that further improves the model's shape and textures. The authors call this overall process bootstrapped score distillation, since the diffusion model (fine-tuned with DreamBooth) and the 3d model are both iteratively optimized. The intuition is that the 3d scene representation and the diffusion model's understanding of how to generate images of the scene help each other get better. The texture boosting result is shown below.
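The alternation behind bootstrapped score distillation can be illustrated with a deliberately tiny toy. Everything here is an assumption for illustration, not the paper's method: images are three numbers, the renderer is the identity, `restore` is a hypothetical stand-in for augmenting renders with a diffusion model, the prior update stands in for DreamBooth fine-tuning, and the SDS step is replaced by its noise-free direction for a diffusion model that treats a single image as on-prompt.

```python
def restore(views, detail, strength=0.3):
    """Hypothetical stand-in for 'add noise, then let a diffusion model restore it'.

    Pulls each rendered value part of the way toward the subject's true detail,
    which the toy assumes a restoring diffusion model partly knows.
    """
    return [v + strength * (d - v) for v, d in zip(views, detail)]


def bootstrapped_score_distillation(theta, prior, detail, rounds=60, steps=50,
                                    lr=0.2, adapt=0.5):
    """Alternate between personalizing the prior and updating the toy 3d model."""
    errors = []
    for _ in range(rounds):
        # (a) Render the current 3d model (identity renderer) and augment the renders.
        views = restore(theta, detail)
        # (b) DreamBooth stand-in: move the prior toward the augmented views.
        prior = [p + adapt * (v - p) for p, v in zip(prior, views)]
        # (c) SDS stand-in: if the diffusion model treated `prior` as the only
        #     on-prompt image, the SDS gradient would be proportional to (theta - prior).
        for _ in range(steps):
            theta = [t - lr * (t - p) for t, p in zip(theta, prior)]
        # Track how far the 3d model still is from the sharp subject.
        errors.append(max(abs(t - d) for t, d in zip(theta, detail)))
    return theta, prior, errors


detail = [0.1, 0.9, 0.7]  # sharp subject the toy tries to recover (made-up data)
theta = [0.4, 0.4, 0.6]   # blurry geometry-stage output (made-up data)
prior = [0.5, 0.5, 0.5]   # generic prior before personalization (made-up data)
theta, prior, errors = bootstrapped_score_distillation(theta, prior, detail)
print(errors[0], errors[-1])
```

In this toy the error shrinks every round: a better 3d model yields better augmented views, which yield a better personalized prior, which in turn guides the 3d model further. The real method relies on the same kind of feedback loop, but with neural networks, noisy gradients, and many camera views.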

On a dataset of 300 image-to-3d examples, and compared with two other image-to-3d methods (Make-it-3D and Magic123), DreamCraft3D consistently scored best across four diverse metrics (CLIP, Contextual, PSNR, and LPIPS). But those numbers don't fully capture how much better DreamCraft3D's results look. In a user study with 32 participants, DreamCraft3D's generated models were preferred 92% of the time. And from the examples below, it's pretty clear that this method generates much more visually appealing 3d models. With results like these, perhaps DreamCraft3D will be the next member of Pixar's animation team! 🤔
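Of the four metrics, PSNR (peak signal-to-noise ratio) is simple enough to compute by hand. It measures pixel-level agreement between a rendering and a reference image in decibels, with higher meaning closer. The other three metrics compare images using features from pretrained neural networks and are not shown. This is a minimal sketch assuming flat lists of pixel values scaled to [0, 1]; it is not the paper's evaluation code.

```python
import math


def psnr(pred, target, max_value=1.0):
    """Peak signal-to-noise ratio in decibels between two equally sized images.

    Images are flat lists of pixel values in [0, max_value]; higher is better.
    """
    if len(pred) != len(target) or not target:
        raise ValueError("images must be non-empty and the same size")
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


reference = [0.0, 0.5, 1.0, 0.25]
rendering = [0.1, 0.5, 0.9, 0.25]
print(round(psnr(rendering, reference), 2))  # 23.01
```

Here the mean squared error is 0.005, so PSNR is 10 · log10(1 / 0.005) = 10 · log10(200), or about 23 dB. Because PSNR only looks at raw pixel differences, it can miss perceptual quality, which is one reason learned metrics like LPIPS and user studies are reported alongside it.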